8. Case study: Generalised bootstrapping for a homonuclear diatomic scattering system, \(N_2~(D_{\infty h})\)

In this chapter, the full code and analysis details of the case study for \(N_2\) are given, including obtaining the required data, running fits and running analysis routines. For more details on the routines, see the PEMtk documentation [20]; for the analysis, see particularly the fit fidelity and analysis page and the molecular frame analysis data processing page (full analysis for Ref. [3], illustrating the \(N_2\) case).

8.1. General setup

In the following code cells (see source notebooks for full details) the general setup routines (as per the outline in Chpt. 7) are executed via a configuration script with presets for the case studies herein.

Additionally, the routines will either run new fits or load existing fit data if available. Since fitting can be computationally demanding, large fitting problems should be approached carefully, for example by running fits in batches and writing checkpoint files between batches.

# Configure settings for case study

# Set case study by name

fitSystem='N2'

fitStem=f"fit_withNoise_orb5"

# Add noise?

addNoise = 'y'

mu, sigma = 0, 0.05 # Up to approx 10% noise (+/- 0.05)

# Batching - number of fits to run between data dumps

batchSize = 10

# Total fits to run

nMax = 10
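The noise settings above are passed to the fitting setup script further below (via the -n and --sigma flags). How that script applies the noise is not shown in this chapter; the following is a minimal sketch assuming additive Gaussian noise drawn with NumPy's random Generator and applied elementwise to a toy array standing in for the simulated AF-\(\beta_{LM}\) data (13 time points by 4 terms, matching the 52 AF-BLM points reported in the setup output). The helper name add_gaussian_noise is hypothetical.

```python
import numpy as np


def add_gaussian_noise(values, mu=0.0, sigma=0.05, seed=None):
    """Return a copy of `values` with additive Gaussian noise (hypothetical helper).

    Assumes noise is added elementwise; the case-study setup script may apply it differently.
    """
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    # One independent noise sample per data point, matching the input shape
    noise = rng.normal(loc=mu, scale=sigma, size=values.shape)
    return values + noise


# Toy stand-in for AF-BLM data: 13 time points x 4 (L, M) terms
clean = np.ones((13, 4))
noisy = add_gaussian_noise(clean, mu=0, sigma=0.05, seed=1)
print(noisy.shape, float(np.abs(noisy - clean).max()))
```

Passing a seed makes the noisy dataset reproducible, which helps when comparing different fit runs against the same synthetic data.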


# Run default config - may need to set full path here

%run '../scripts/setup_notebook_caseStudies_Mod-300723.py' # Test version with different figure options.

# %run '../scripts/setup_notebook.py'

# Set outputs for notebook or PDF (skips Holoviews plots unless glued)

# Note this is set to default 'pl' in script above

if buildEnv == 'pdf':
    paramPlotBackend = 'sns' # For category plots with paramPlot
else:
    paramPlotBackend = 'hv'

# plotBackend = 'sns' # For category plots with paramPlot

*** Setting up notebook with standard Quantum Metrology Vol. 3 imports...

For more details see https://pemtk.readthedocs.io/en/latest/fitting/PEMtk_fitting_basic_demo_030621-full.html

To use local source code, pass the parent path to this script at run time, e.g. "setup_fit_demo ~/github"

*** Running: 2023-12-07 10:49:15

Working dir: /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2

Build env: html

None

* Loading packages...

* sparse not found, sparse matrix forms not available.

* natsort not found, some sorting functions not available.

* Setting plotter defaults with epsproc.basicPlotters.setPlotters(). Run directly to modify, or change options in local env.

* Set Holoviews with bokeh.

* pyevtk not found, VTK export not available.

* Set Holoviews with bokeh.

Jupyter Book : 0.15.1

External ToC : 0.3.1

MyST-Parser : 0.18.1

MyST-NB : 0.17.2

Sphinx Book Theme : 1.0.1

Jupyter-Cache : 0.6.1

NbClient : 0.7.4

# Pull data from web (N2 case)

from epsproc.util.io import getFilesFromGithub

# Set dataName (will be used as download subdir)

dataName = 'n2fitting'

# N2 matrix elements

fDictMatE, fAllMatE = getFilesFromGithub(subpath='data/photoionization/n2_multiorb', dataName=dataName)

# N2 alignment data

fDictADM, fAllMatADM = getFilesFromGithub(subpath='data/alignment', dataName=dataName)


Querying URL: https://api.github.com/repos/phockett/epsproc/contents/data/photoionization/n2_multiorb

Local file /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/n2_1pu_0.1-50.1eV_A2.inp.out already exists

Local file /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/n2_3sg_0.1-50.1eV_A2.inp.out already exists

Querying URL: https://api.github.com/repos/phockett/epsproc/contents/data/alignment

Local file /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_ADM_VM_290816.mat already exists

# Fitting setup including data generation and parameter creation

# Set dataPath

dataPath = Path(Path.cwd(),dataName)

# Run general config script with dataPath set above

%run "../scripts/setup_fit_case-studies_270723.py" -d {dataPath} -c {fitSystem} -n {addNoise} --sigma {sigma}


*** Setting up demo fitting workspace and main `data` class object...

Script: QM3 case studies

For more details see https://pemtk.readthedocs.io/en/latest/fitting/PEMtk_fitting_basic_demo_030621-full.html

To use local source code, pass the parent path to this script at run time, e.g. "setup_fit_demo ~/github"

* Loading packages...

* Loading demo matrix element data from /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting

*** Job subset details

Key: subset

No 'job' info set for self.data[subset].

*** Job orb6 details

Key: orb6

Dir /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting, 1 file(s).

{ 'batch': 'ePS n2, batch n2_1pu_0.1-50.1eV, orbital A2',

'event': ' N2 A-state (1piu-1)',

'orbE': -17.09691397835426,

'orbLabel': '1piu-1'}

*** Job orb5 details

Key: orb5

Dir /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting, 1 file(s).

{ 'batch': 'ePS n2, batch n2_3sg_0.1-50.1eV, orbital A2',

'event': ' N2 X-state (3sg-1)',

'orbE': -17.34181645456815,

'orbLabel': '3sg-1'}


*** Running with default N2 settings.

* Loading demo ADM data from file /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_ADM_VM_290816.mat...

* Subselecting data...

*** Setting for N2 case study.

Subselected from dataset 'orb5', dataType 'matE': 36 from 11016 points (0.33%)

Subselected from dataset 'pol', dataType 'pol': 1 from 3 points (33.33%)

Subselected from dataset 'ADM', dataType 'ADM': 52 from 14764 points (0.35%)

* Calculating AF-BLMs...

Running for 13 t-points

Subselected from dataset 'sim', dataType 'AFBLM': 52 from 52 points (100.00%)

*Setting up fit parameters (with constraints)...

Set 6 complex matrix elements to 12 fitting params, see self.params for details.

Auto-setting parameters.

Basis set size = 12 params. Fitting with 4 magnitudes and 3 phases floated.

*** Adding Gaussian noise, mu=0, sigma=0.05

*** Setup demo fitting workspace OK.

Dataset: subset, AFBLM

Dataset: sim, AFBLM

| name | value | initial value | min | max | vary | expression |

|---|---|---|---|---|---|---|

| m_PU_SG_PU_1_n1_1_1 | 1.78461575 | 1.784615753610107 | 1.0000e-04 | 5.00000000 | True | |

| m_PU_SG_PU_1_1_n1_1 | 1.78461575 | 1.784615753610107 | 1.0000e-04 | 5.00000000 | False | m_PU_SG_PU_1_n1_1_1 |

| m_PU_SG_PU_3_n1_1_1 | 0.80290495 | 0.802904951323892 | 1.0000e-04 | 5.00000000 | True | |

| m_PU_SG_PU_3_1_n1_1 | 0.80290495 | 0.802904951323892 | 1.0000e-04 | 5.00000000 | False | m_PU_SG_PU_3_n1_1_1 |

| m_SU_SG_SU_1_0_0_1 | 2.68606212 | 2.686062120382649 | 1.0000e-04 | 5.00000000 | True | |

| m_SU_SG_SU_3_0_0_1 | 1.10915311 | 1.109153108617096 | 1.0000e-04 | 5.00000000 | True | |

| p_PU_SG_PU_1_n1_1_1 | -0.86104140 | -0.8610414024232179 | -3.14159265 | 3.14159265 | False | |

| p_PU_SG_PU_1_1_n1_1 | -0.86104140 | -0.8610414024232179 | -3.14159265 | 3.14159265 | False | p_PU_SG_PU_1_n1_1_1 |

| p_PU_SG_PU_3_n1_1_1 | -3.12044446 | -3.1204444620772467 | -3.14159265 | 3.14159265 | True | |

| p_PU_SG_PU_3_1_n1_1 | -3.12044446 | -3.1204444620772467 | -3.14159265 | 3.14159265 | False | p_PU_SG_PU_3_n1_1_1 |

| p_SU_SG_SU_1_0_0_1 | 2.61122920 | 2.611229196458127 | -3.14159265 | 3.14159265 | True | |

| p_SU_SG_SU_3_0_0_1 | -0.07867828 | -0.07867827542158025 | -3.14159265 | 3.14159265 | True | |

8.2. Load existing fit data or run fits

Note that running fits may be quite time-consuming and computationally intensive, depending on the size of the problem. By default, a small batch of fits is run for testing if no existing data is found on the dataPath; otherwise, the existing data is loaded for analysis.
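The batch loop in the next cell runs fits in blocks of batchSize and writes a checkpoint file after each block, named with the index of the last fit in that block. A minimal pure-Python sketch of the index ranges and file stems this produces is given below; the helper names are hypothetical, and in the notebook the actual work is done by data.multiFit() and data.writeFitData(). The reference file loaded in this chapter, N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle, follows the same naming pattern, with 1199 as the last fit index of the final batch.

```python
def batch_ranges(n_max, batch_size):
    """Inclusive [start, end] fit-index ranges, one per batch (mirrors np.arange(0, nMax, batchSize))."""
    return [(n, n + batch_size - 1) for n in range(0, n_max, batch_size)]


def checkpoint_stem(fit_system, end_index, fit_stem):
    """Checkpoint file stem, following the f-string passed to data.writeFitData()."""
    return f"{fit_system}_{end_index}_{fit_stem}"


for start, end in batch_ranges(n_max=30, batch_size=10):
    # In the notebook, data.multiFit(nRange=[start, end], ...) would run here
    print(start, end, checkpoint_stem("N2", end, "fit_withNoise_orb5"))
```

As in the notebook loop, if nMax is not a multiple of batchSize the last range extends past nMax - 1.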

# Look for existing Pickle files on path?
# dataFiles = list(dataPath.expanduser().glob('*.pickle'))
dataFiles = [Path(dataPath.expanduser(), 'N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle')] # Set reference dataset(s)
dataFiles = [f for f in dataFiles if f.exists()] # Keep only files that are actually present on disk

if not dataFiles:
    print("No data found, executing minimal fitting run...")

    # Run fit batch - single
    # data.multiFit(nRange = [n,n+batchSize-1], num_workers=batchSize)

    # Run fit batches with checkpoint files
    for n in np.arange(0,nMax,batchSize):
        print(f'*** Running batch [{n},{n+batchSize-1}], {dt.now().strftime("%d%m%y_%H-%M-%S")}')

        # Run fit batch
        data.multiFit(nRange = [n,n+batchSize-1], num_workers=batchSize)

        # Dump data so far
        data.writeFitData(outStem=f"{fitSystem}_{n+batchSize-1}_{fitStem}")

        print(f'Finished batch [{n},{n+batchSize-1}], {dt.now().strftime("%d%m%y_%H-%M-%S")}')
        print(f'Written to file {fitSystem}_{n+batchSize-1}_{fitStem}')

else:
    dataFileIn = dataFiles[-1] # Add index to select file, although loadFitData will concat multiple files
    # Note that concat currently only works for fixed batch sizes however.
    print(f"Set dataFiles: {dataFileIn}")
    data.loadFitData(fList=dataFileIn, dataPath=dataPath) #.expanduser())

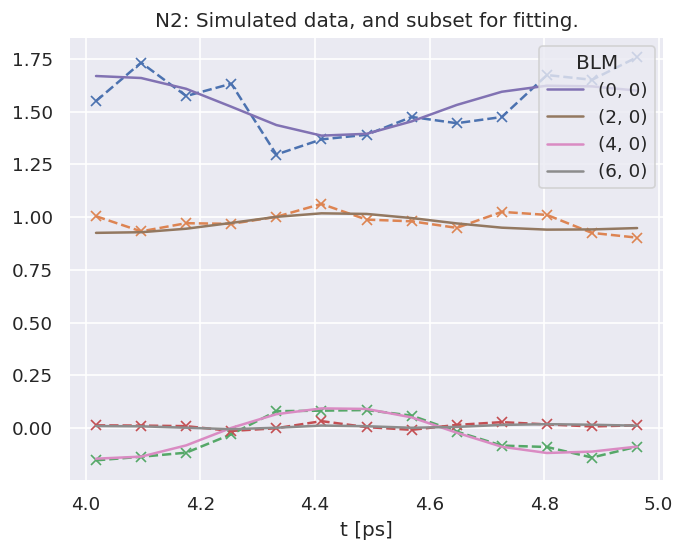

data.BLMfitPlot(keys=['subset','sim'])

Set dataFiles: /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle

Read data from /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle with pickle.

Dataset: subset, AFBLM

Dataset: sim, AFBLM

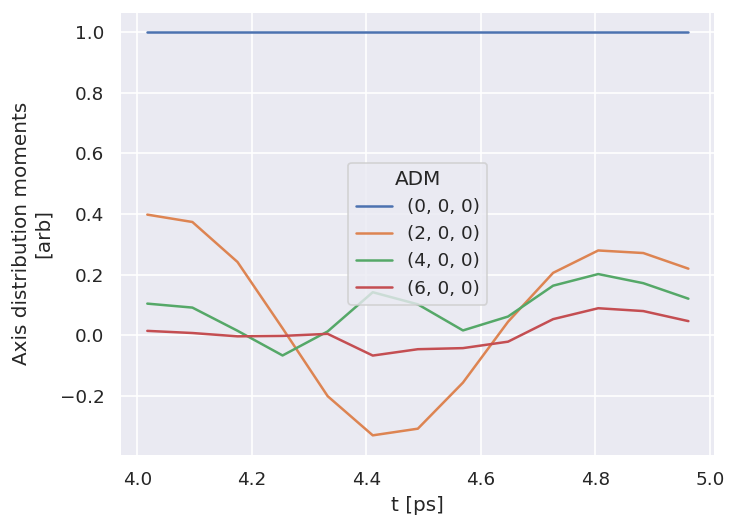

# Check ADMs

# Basic plotter

data.ADMplot(keys = 'subset')

Dataset: subset, ADM


# Check ADMs

# Holoviews

data.data['subset']['ADM'].unstack().where(data.data['subset']['ADM'].unstack().K>0) \
    .real.hvplot.line(x='t').overlay(['K','Q','S'])

# Fits appear as integer indexed items in the main data structure.

data.data.keys()


dict_keys(['subset', 'orb6', 'orb5', 'ADM', 'pol', 'sim', 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184, 185, 186, 187, 188, 189, 190, 191, 192, 193, 194, 195, 196, 197, 198, 200, 201, 202, 203, 204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217, 218, 219, 220, 221, 222, 223, 224, 225, 226, 227, 228, 229, 230, 231, 232, 233, 234, 235, 236, 237, 238, 240, 241, 242, 243, 244, 245, 246, 247, 248, 249, 250, 251, 252, 253, 254, 255, 256, 257, 258, 259, 260, 261, 262, 263, 264, 265, 266, 267, 268, 269, 270, 271, 272, 273, 274, 275, 276, 277, 278, 280, 281, 282, 283, 284, 285, 286, 287, 288, 289, 290, 291, 292, 293, 294, 295, 296, 297, 298, 299, 300, 301, 302, 303, 304, 305, 306, 307, 308, 309, 310, 311, 312, 313, 314, 315, 316, 317, 318, 320, 321, 322, 323, 324, 325, 326, 327, 328, 329, 330, 331, 332, 333, 334, 335, 336, 337, 338, 339, 340, 341, 342, 343, 344, 345, 346, 347, 348, 349, 350, 351, 352, 353, 354, 355, 356, 357, 358, 360, 361, 362, 363, 364, 365, 366, 367, 368, 369, 370, 371, 372, 373, 374, 375, 376, 377, 378, 379, 380, 381, 382, 383, 384, 385, 386, 387, 388, 389, 390, 391, 392, 393, 394, 395, 396, 397, 398, 400, 401, 402, 403, 404, 405, 406, 407, 408, 409, 410, 411, 412, 413, 414, 415, 416, 417, 418, 419, 
420, 421, 422, 423, 424, 425, 426, 427, 428, 429, 430, 431, 432, 433, 434, 435, 436, 437, 438, 440, 441, 442, 443, 444, 445, 446, 447, 448, 449, 450, 451, 452, 453, 454, 455, 456, 457, 458, 459, 460, 461, 462, 463, 464, 465, 466, 467, 468, 469, 470, 471, 472, 473, 474, 475, 476, 477, 478, 480, 481, 482, 483, 484, 485, 486, 487, 488, 489, 490, 491, 492, 493, 494, 495, 496, 497, 498, 499, 500, 501, 502, 503, 504, 505, 506, 507, 508, 509, 510, 511, 512, 513, 514, 515, 516, 517, 518, 520, 521, 522, 523, 524, 525, 526, 527, 528, 529, 530, 531, 532, 533, 534, 535, 536, 537, 538, 539, 540, 541, 542, 543, 544, 545, 546, 547, 548, 549, 550, 551, 552, 553, 554, 555, 556, 557, 558, 560, 561, 562, 563, 564, 565, 566, 567, 568, 569, 570, 571, 572, 573, 574, 575, 576, 577, 578, 579, 580, 581, 582, 583, 584, 585, 586, 587, 588, 589, 590, 591, 592, 593, 594, 595, 596, 597, 598, 600, 601, 602, 603, 604, 605, 606, 607, 608, 609, 610, 611, 612, 613, 614, 615, 616, 617, 618, 619, 620, 621, 622, 623, 624, 625, 626, 627, 628, 629, 630, 631, 632, 633, 634, 635, 636, 637, 638, 640, 641, 642, 643, 644, 645, 646, 647, 648, 649, 650, 651, 652, 653, 654, 655, 656, 657, 658, 659, 660, 661, 662, 663, 664, 665, 666, 667, 668, 669, 670, 671, 672, 673, 674, 675, 676, 677, 678, 680, 681, 682, 683, 684, 685, 686, 687, 688, 689, 690, 691, 692, 693, 694, 695, 696, 697, 698, 699, 700, 701, 702, 703, 704, 705, 706, 707, 708, 709, 710, 711, 712, 713, 714, 715, 716, 717, 718, 720, 721, 722, 723, 724, 725, 726, 727, 728, 729, 730, 731, 732, 733, 734, 735, 736, 737, 738, 739, 740, 741, 742, 743, 744, 745, 746, 747, 748, 749, 750, 751, 752, 753, 754, 755, 756, 757, 758, 760, 761, 762, 763, 764, 765, 766, 767, 768, 769, 770, 771, 772, 773, 774, 775, 776, 777, 778, 779, 780, 781, 782, 783, 784, 785, 786, 787, 788, 789, 790, 791, 792, 793, 794, 795, 796, 797, 798, 800, 801, 802, 803, 804, 805, 806, 807, 808, 809, 810, 811, 812, 813, 814, 815, 816, 817, 818, 819, 820, 821, 822, 823, 824, 825, 826, 827, 828, 829, 
830, 831, 832, 833, 834, 835, 836, 837, 838, 840, 841, 842, 843, 844, 845, 846, 847, 848, 849, 850, 851, 852, 853, 854, 855, 856, 857, 858, 859, 860, 861, 862, 863, 864, 865, 866, 867, 868, 869, 870, 871, 872, 873, 874, 875, 876, 877, 878, 880, 881, 882, 883, 884, 885, 886, 887, 888, 889, 890, 891, 892, 893, 894, 895, 896, 897, 898, 899, 900, 901, 902, 903, 904, 905, 906, 907, 908, 909, 910, 911, 912, 913, 914, 915, 916, 917, 918, 920, 921, 922, 923, 924, 925, 926, 927, 928, 929, 930, 931, 932, 933, 934, 935, 936, 937, 938, 939, 940, 941, 942, 943, 944, 945, 946, 947, 948, 949, 950, 951, 952, 953, 954, 955, 956, 957, 958, 960, 961, 962, 963, 964, 965, 966, 967, 968, 969, 970, 971, 972, 973, 974, 975, 976, 977, 978, 979, 980, 981, 982, 983, 984, 985, 986, 987, 988, 989, 990, 991, 992, 993, 994, 995, 996, 997, 998, 1000, 1001, 1002, 1003, 1004, 1005, 1006, 1007, 1008, 1009, 1010, 1011, 1012, 1013, 1014, 1015, 1016, 1017, 1018, 1019, 1020, 1021, 1022, 1023, 1024, 1025, 1026, 1027, 1028, 1029, 1030, 1031, 1032, 1033, 1034, 1035, 1036, 1037, 1038, 1040, 1041, 1042, 1043, 1044, 1045, 1046, 1047, 1048, 1049, 1050, 1051, 1052, 1053, 1054, 1055, 1056, 1057, 1058, 1059, 1060, 1061, 1062, 1063, 1064, 1065, 1066, 1067, 1068, 1069, 1070, 1071, 1072, 1073, 1074, 1075, 1076, 1077, 1078, 1080, 1081, 1082, 1083, 1084, 1085, 1086, 1087, 1088, 1089, 1090, 1091, 1092, 1093, 1094, 1095, 1096, 1097, 1098, 1099, 1100, 1101, 1102, 1103, 1104, 1105, 1106, 1107, 1108, 1109, 1110, 1111, 1112, 1113, 1114, 1115, 1116, 1117, 1118, 1120, 1121, 1122, 1123, 1124, 1125, 1126, 1127, 1128, 1129, 1130, 1131, 1132, 1133, 1134, 1135, 1136, 1137, 1138, 1139, 1140, 1141, 1142, 1143, 1144, 1145, 1146, 1147, 1148, 1149, 1150, 1151, 1152, 1153, 1154, 1155, 1156, 1157, 1158, 1160, 1161, 1162, 1163, 1164, 1165, 1166, 1167, 1168, 1169, 1170, 1171, 1172, 1173, 1174, 1175, 1176, 1177, 1178, 1179, 1180, 1181, 1182, 1183, 1184, 1185, 1186, 1187, 1188, 1189, 1190, 1191, 1192, 1193, 1194, 1195, 1196, 1197, 1198])
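Since data.data mixes named datasets (string keys) with individual fit runs (integer keys), it can be useful to separate the two and check for gaps. In the listing above, for example, every 40th index starting at 39 (39, 79, 119 and so on up to 1159) is absent, leaving 1170 fits in the range 0 to 1198; this matches the 'Fits': 1170 count reported by analyseFits() in Sect. 8.3. The helpers below are a hypothetical sketch, not part of PEMtk; they test against numbers.Integral in case the fit keys are NumPy integers rather than plain Python ints.

```python
import numbers


def fit_indices(keys):
    """Sorted integer keys (individual fit runs), skipping named datasets such as 'subset' or 'sim'.

    numbers.Integral also matches NumPy integer types; bool is excluded explicitly.
    """
    return sorted(k for k in keys if isinstance(k, numbers.Integral) and not isinstance(k, bool))


def missing_indices(indices):
    """Integers absent between the smallest and largest fit index."""
    present = set(indices)
    return [i for i in range(min(indices), max(indices) + 1) if i not in present]


# Toy example with the same mix of key types as data.data.keys()
keys = ['subset', 'orb6', 'orb5', 'ADM', 'pol', 'sim', 0, 1, 2, 4, 5]
idx = fit_indices(keys)
print(len(idx), missing_indices(idx))  # 5 [3]
```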

8.3. Post-processing and data overview

Post-processing involves aggregating all the fit run results into a single data structure. This can then be analysed statistically and examined for best-fit results. In the statistical sense, this is essentially a search for candidate radial matrix elements, based on the assumption that some of the minima found in the \(\chi^2\) hyperspace will correspond to the true results. Even if a clear global minimum does not exist, searching for candidate sets of radial matrix elements based on clustering of results and multiple local minima is still expected to lead to viable candidates, provided that the information content of the dataset is sufficient. However, as discussed elsewhere (see Sect. 4.2), this may not hold in some cases: other limitations may apply (e.g. certain parameters may be undefined), or additional data may be required for unique determination of the radial matrix elements.

For more details on the analysis routines, see the PEMtk documentation [20], particularly the fit fidelity and analysis page, and molecular frame analysis data processing page (full analysis for Ref. [3], illustrating the \(N_2\) case).

# General stats & post-processing to data tables

data.analyseFits()

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

{ 'Fits': 1170,

'Minima': {'chisqr': 0.07137430989515783, 'redchi': 0.000479022214061462},

'Stats': { 'chisqr': min 0.071

mean 0.084

median 0.071

max 2.594

std 0.089

var 0.008

Name: chisqr, dtype: float64,

'redchi': min 4.790e-04

mean 5.652e-04

median 4.790e-04

max 1.741e-02

std 6.002e-04

var 3.602e-07

Name: redchi, dtype: float64},

'Success': 1169}
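The summary above reports the minimum, mean, median, maximum, standard deviation and variance of chisqr and redchi over all fits. For readers working with the raw values outside PEMtk, similar statistics, plus the index of the best fit, can be obtained with pandas; this is a sketch on toy values, not the analyseFits() implementation.

```python
import pandas as pd


def summarise_fits(redchi):
    """Summary statistics and best-fit index for a Series of reduced chi^2 values indexed by fit number."""
    stats = redchi.agg(['min', 'mean', 'median', 'max', 'std', 'var'])
    best = redchi.idxmin()  # index label of the first occurrence of the minimum
    return stats, best


redchi = pd.Series([4.79e-4, 4.79e-4, 1.2e-3, 5.0e-4], index=[0, 1, 2, 3], name='redchi')
stats, best = summarise_fits(redchi)
print(stats)
print('Best fit:', best)
```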

# The BLMsetPlot routine will output aggregate fit results.

# Here the spread can be taken as a general indication of the uncertainty of

# the fitting, and indicate whether the fit is well-characterised/the information

# content of the data is sufficient.

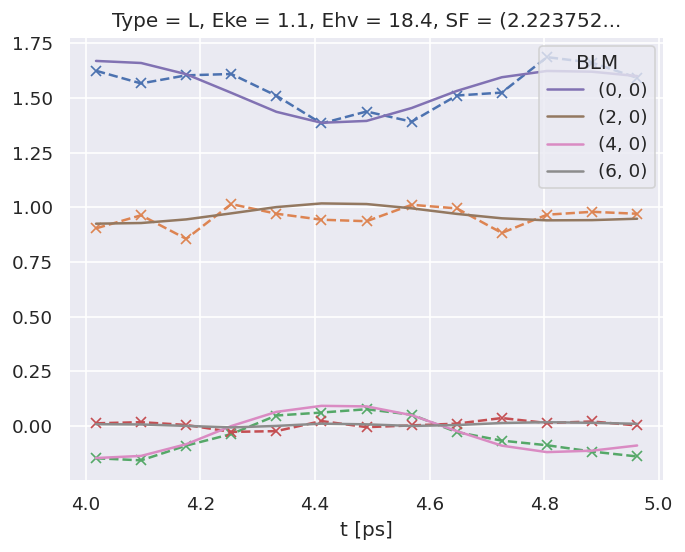

data.BLMsetPlot(xDim = 't', thres=1e-6) # With xDim and thres set, for more control over outputs

# Glue plot for later

glue("N2-fitResultsBLM",data.data['plots']['BLMsetPlot'])


Fig. 8.1 Fit overview plot - \(\beta_{L,M}(t)\). Here dashed lines with ‘+’ markers indicate the input data, and bands indicate the mean fit results, where the band width is the standard deviation of the fit model results. (See the PEMtk documentation [20] for details, particularly the analysis routines page.)
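The bands in Fig. 8.1 summarise many fit results at each time point. A minimal NumPy sketch of that reduction follows, assuming the model \(\beta_{L,M}(t)\) curves from all fits are stacked into an array of shape (number of fits, number of time points) and that the band spans the mean plus or minus one standard deviation. Both assumptions and the function name are illustrative, not taken from BLMsetPlot().

```python
import numpy as np


def fit_bands(model_blm):
    """Mean and +/- 1 standard-deviation band across fits.

    model_blm: array of shape (n_fits, n_t), one beta_LM(t) curve per fit.
    Returns (mean, lower, upper), each of shape (n_t,).
    """
    model_blm = np.asarray(model_blm, dtype=float)
    mean = model_blm.mean(axis=0)
    std = model_blm.std(axis=0)  # population std (ddof=0); pandas .std() defaults to ddof=1
    return mean, mean - std, mean + std


curves = [[1.0, 0.0], [1.2, 0.2], [0.8, -0.2]]  # three toy fits, two time points
mean, lower, upper = fit_bands(curves)
print(mean, upper - lower)
```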

# Write aggregate datasets to HDF5 format

# This is more robust than Pickled data, but PEMtk currently only supports output for aggregate (post-processed) fit data.

data.processedToHDF5(dataPath = dataPath, outStem = dataFileIn.name, timeStamp=False)

Dumped self.data[fits][dfLong] to /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle_dfLong.pdHDF with Pandas .to_hdf() routine.

Dumped data to /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle_dfLong.pdHDF with pdHDF.

Dumped self.data[fits][AFxr] to /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle_AFxr.pdHDF with Pandas .to_hdf() routine.

Dumped data to /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part2/n2fitting/N2_1199_fit_withNoise_orb5_280723_11-39-26.pickle_AFxr.pdHDF with pdHDF.

# Histogram fit results (reduced chi^2 vs. fit index)

# This may be quite slow for large datasets, setting limited ranges may help

# Use default auto binning

# data.fitHist()

# Example with range set

data.fitHist(thres=1.5e-3, bins=100)

# Glue plot for later

glue("N2-fitHist",data.data['plots']['fitHistPlot'])


Mask selected 1155 results (from 1170).

Fig. 8.2 Fit overview plot - \(\chi^2\) vs. fit index. Here bands indicate groupings (local minima) that are consistently found.

Here, Fig. 8.1 shows an overview of the results compared with the input data, and Fig. 8.2 an overview of \(\chi^2\) vs. fit index. Bands in the \(\chi^2\) dimension can indicate that groupings (local minima) are consistently found. Assuming each grouping is a viable candidate parameter set, these can then be explored in further detail.
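The fitHist(thres=1.5e-3, bins=100) call above masks out poorer fits before binning ("Mask selected 1155 results (from 1170)"). The sketch below shows the same idea as a one-dimensional histogram of reduced \(\chi^2\) with NumPy, which is a projection of Fig. 8.2 onto the \(\chi^2\) axis: peaks in the counts correspond to the horizontal bands. It assumes the threshold keeps values strictly below thres; that choice and the function name are assumptions, not PEMtk's implementation.

```python
import numpy as np


def redchi_histogram(redchi, thres=None, bins=100):
    """Histogram reduced chi^2 values, keeping only values below `thres` if given.

    Returns (counts, bin_edges, number_of_values_kept).
    """
    redchi = np.asarray(redchi, dtype=float)
    mask = np.ones(redchi.shape, dtype=bool) if thres is None else redchi < thres
    counts, edges = np.histogram(redchi[mask], bins=bins)
    return counts, edges, int(mask.sum())


toy = [4.79e-4] * 5 + [5.2e-4] * 3 + [1.7e-2]  # one poor fit above the threshold
counts, edges, n_kept = redchi_histogram(toy, thres=1.5e-3, bins=10)
print(n_kept, counts)
```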

8.4. Data exploration

The general aim in this procedure is to ascertain whether the fits explored a good spread of parameter values, and whether a single best-fit result (or a small number of candidate sets) emerges. A few procedures and helper methods are available for this, as outlined in the following sections.

8.4.1. View results

Single results sets can be viewed in the main data structure, indexed by integers.

# Check keys

fitNumber = 2

data.data[fitNumber].keys()

dict_keys(['AFBLM', 'residual', 'results'])

Here results is an lmfit results object, which includes the final fit results and fit information, and AFBLM contains the model (fit) output (i.e. the resultant AF-\(\beta_{LM}\) values).

An example is shown below. Of particular note here are the parameters with vary=True, which are included in the fitting, and any entries in the expression column, which indicate parameters defined to have specific relationships (see Chpt. 6). Any correlations found during fitting are also shown; these can also indicate parameters that are related, even if the relationship is not predefined or known a priori.
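The parameter table printed by the setup script in Sect. 8.1 lists 12 real fit parameters for 6 complex matrix elements: each element has a magnitude (m_ prefix) and a phase (p_ prefix), and some are tied to others through the expression column. A minimal sketch of how such a table maps back to complex values is given below, assuming each element is \(m e^{i p}\) and modelling ties as a simple name-to-name mapping rather than full lmfit expressions. The function and its arguments are hypothetical.

```python
import numpy as np


def complex_matrix_elements(params, ties):
    """Combine magnitude ('m_') and phase ('p_') parameters into complex matrix elements.

    params: dict of independent parameter values.
    ties:   dict mapping each tied parameter name to the parameter it copies
            (a simplified stand-in for lmfit expressions such as 'm_PU_SG_PU_1_n1_1_1';
            chained ties are not resolved).
    """
    values = dict(params)
    for name, source in ties.items():
        values[name] = values[source]
    labels = sorted(name[2:] for name in values if name.startswith('m_'))
    return {lab: values['m_' + lab] * np.exp(1j * values['p_' + lab]) for lab in labels}


# Reference values for one tied pair of elements, from the setup-script parameter table
params = {'m_PU_SG_PU_1_n1_1_1': 1.78461575, 'p_PU_SG_PU_1_n1_1_1': -0.86104140}
ties = {'m_PU_SG_PU_1_1_n1_1': 'm_PU_SG_PU_1_n1_1_1',
        'p_PU_SG_PU_1_1_n1_1': 'p_PU_SG_PU_1_n1_1_1'}
mat = complex_matrix_elements(params, ties)
print(mat)
```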

# Show some results

data.data[fitNumber]['results']


Fit Result

| fitting method | leastsq |

| # function evals | 499 |

| # data points | 156 |

| # variables | 7 |

| chi-square | 0.07259614 |

| reduced chi-square | 4.8722e-04 |

| Akaike info crit. | -1182.94113 |

| Bayesian info crit. | -1161.59214 |

| name | value | standard error | relative error | initial value | min | max | vary | expression |

|---|---|---|---|---|---|---|---|---|

| m_PU_SG_PU_1_n1_1_1 | 1.93822321 | 0.09004974 | (4.65%) | 0.0026613290483150642 | 1.0000e-04 | 5.00000000 | True | |

| m_PU_SG_PU_1_1_n1_1 | 1.93822321 | 0.09004974 | (4.65%) | 0.0026613290483150642 | 1.0000e-04 | 5.00000000 | False | m_PU_SG_PU_1_n1_1_1 |

| m_PU_SG_PU_3_n1_1_1 | 0.56897501 | 0.32588246 | (57.28%) | 0.9271179600873545 | 1.0000e-04 | 5.00000000 | True | |

| m_PU_SG_PU_3_1_n1_1 | 0.56897501 | 0.32588246 | (57.28%) | 0.9271179600873545 | 1.0000e-04 | 5.00000000 | False | m_PU_SG_PU_3_n1_1_1 |

| m_SU_SG_SU_1_0_0_1 | 2.28233546 | 0.37737228 | (16.53%) | 0.19665462847165494 | 1.0000e-04 | 5.00000000 | True | |

| m_SU_SG_SU_3_0_0_1 | 1.67057390 | 0.51264458 | (30.69%) | 0.32760832328728184 | 1.0000e-04 | 5.00000000 | True | |

| p_PU_SG_PU_1_n1_1_1 | -0.86104140 | 0.00000000 | (0.00%) | -0.8610414024232179 | -3.14159265 | 3.14159265 | False | |

| p_PU_SG_PU_1_1_n1_1 | -0.86104140 | 0.00000000 | (0.00%) | -0.8610414024232179 | -3.14159265 | 3.14159265 | False | p_PU_SG_PU_1_n1_1_1 |

| p_PU_SG_PU_3_n1_1_1 | -2.60134553 | 0.23781970 | (9.14%) | 0.5945585158325789 | -3.14159265 | 3.14159265 | True | |

| p_PU_SG_PU_3_1_n1_1 | -2.60134553 | 0.23781970 | (9.14%) | 0.5945585158325789 | -3.14159265 | 3.14159265 | False | p_PU_SG_PU_3_n1_1_1 |

| p_SU_SG_SU_1_0_0_1 | -3.14159265 | 0.01501433 | (0.48%) | 0.6426895985883777 | -3.14159265 | 3.14159265 | True | |

| p_SU_SG_SU_3_0_0_1 | -0.98055968 | 0.33998498 | (34.67%) | 0.042574135604000696 | -3.14159265 | 3.14159265 | True | |

| Parameter 1 | Parameter 2 | Correlation |

|---|---|---|

| m_SU_SG_SU_1_0_0_1 | m_SU_SG_SU_3_0_0_1 | -0.9939 |

| m_PU_SG_PU_1_n1_1_1 | m_PU_SG_PU_3_n1_1_1 | -0.9570 |

| m_PU_SG_PU_3_n1_1_1 | p_SU_SG_SU_3_0_0_1 | -0.9552 |

| m_PU_SG_PU_3_n1_1_1 | p_SU_SG_SU_1_0_0_1 | -0.9522 |

| m_PU_SG_PU_1_n1_1_1 | p_SU_SG_SU_1_0_0_1 | +0.9429 |

| m_PU_SG_PU_1_n1_1_1 | p_SU_SG_SU_3_0_0_1 | +0.9400 |

| p_SU_SG_SU_1_0_0_1 | p_SU_SG_SU_3_0_0_1 | +0.9195 |

| m_SU_SG_SU_1_0_0_1 | p_SU_SG_SU_3_0_0_1 | +0.6446 |

| m_SU_SG_SU_3_0_0_1 | p_SU_SG_SU_3_0_0_1 | -0.6202 |

| m_SU_SG_SU_1_0_0_1 | p_PU_SG_PU_3_n1_1_1 | -0.6175 |

| m_SU_SG_SU_3_0_0_1 | p_PU_SG_PU_3_n1_1_1 | +0.6004 |

| p_PU_SG_PU_3_n1_1_1 | p_SU_SG_SU_1_0_0_1 | -0.4348 |

| m_PU_SG_PU_3_n1_1_1 | m_SU_SG_SU_1_0_0_1 | -0.4180 |

| m_SU_SG_SU_1_0_0_1 | p_SU_SG_SU_1_0_0_1 | +0.4122 |

| m_PU_SG_PU_1_n1_1_1 | m_SU_SG_SU_1_0_0_1 | +0.4108 |

| m_PU_SG_PU_1_n1_1_1 | m_SU_SG_SU_3_0_0_1 | -0.4053 |

| p_PU_SG_PU_3_n1_1_1 | p_SU_SG_SU_3_0_0_1 | -0.4005 |

| m_SU_SG_SU_3_0_0_1 | p_SU_SG_SU_1_0_0_1 | -0.3883 |

| m_PU_SG_PU_3_n1_1_1 | m_SU_SG_SU_3_0_0_1 | +0.3822 |

| m_PU_SG_PU_3_n1_1_1 | p_PU_SG_PU_3_n1_1_1 | +0.2617 |

| m_PU_SG_PU_1_n1_1_1 | p_PU_SG_PU_3_n1_1_1 | -0.2164 |

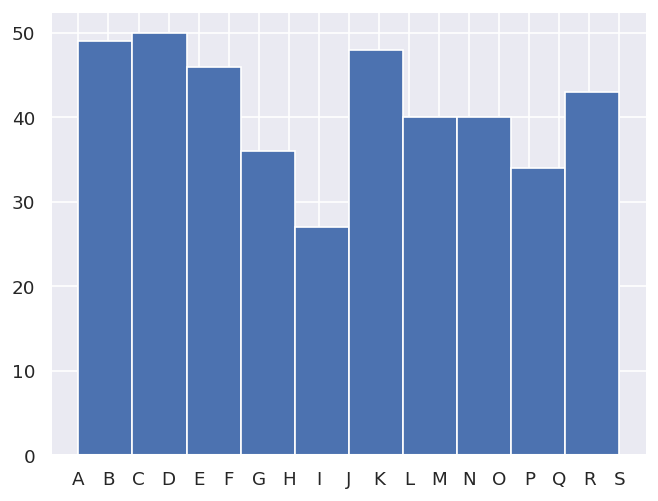
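The goodness-of-fit values in the Fit Result table above follow lmfit's standard definitions, with \(N\) data points and \(N_{var}\) varied parameters: reduced \(\chi^2 = \chi^2/(N - N_{var})\), AIC \(= N\ln(\chi^2/N) + 2N_{var}\) and BIC \(= N\ln(\chi^2/N) + \ln(N)\,N_{var}\). As a quick check, plugging in the values reported for fit 2 (\(\chi^2 = 0.07259614\), \(N = 156\), \(N_{var} = 7\)) reproduces the tabulated values. The function name below is illustrative.

```python
import math


def fit_statistics(chisqr, ndata, nvarys):
    """Reduced chi^2, AIC and BIC using lmfit's definitions."""
    nfree = ndata - nvarys
    redchi = chisqr / nfree
    neg2_log_likelihood = ndata * math.log(chisqr / ndata)
    aic = neg2_log_likelihood + 2 * nvarys
    bic = neg2_log_likelihood + math.log(ndata) * nvarys
    return redchi, aic, bic


redchi, aic, bic = fit_statistics(chisqr=0.07259614, ndata=156, nvarys=7)
print(f"{redchi:.4e} {aic:.5f} {bic:.5f}")
```

Because AIC and BIC penalise the number of varied parameters, they are mainly useful for comparing fits with different parameter sets rather than fits that differ only in their starting values.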

8.5. Classify candidate sets

To probe the minima found, the classifyFits method can be used. This bins results into “candidate” groups, which can then be examined in detail.

# Run with defaults

# data.classifyFits()

# For more control, pass bins

# Here the minimum is set at one end, and a fractional range (relative to the minimum) is used for the bins

minVal = data.fitsSummary['Stats']['redchi']['min']

binRangePC = 1e-8

data.classifyFits(bins = [minVal, minVal + binRangePC*minVal , 20])

| redchiGroup | success count | success unique | success top | success freq | chisqr count | chisqr unique | chisqr top | chisqr freq | redchi count | redchi unique | redchi top | redchi freq |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| A | 31 | 1 | True | 31 | 31.0 | 31.0 | 0.071 | 1.0 | 31.0 | 31.0 | 4.790e-04 | 1.0 |

| B | 18 | 1 | True | 18 | 18.0 | 18.0 | 0.071 | 1.0 | 18.0 | 18.0 | 4.790e-04 | 1.0 |

| C | 25 | 1 | True | 25 | 25.0 | 25.0 | 0.071 | 1.0 | 25.0 | 25.0 | 4.790e-04 | 1.0 |

| D | 25 | 1 | True | 25 | 25.0 | 25.0 | 0.071 | 1.0 | 25.0 | 25.0 | 4.790e-04 | 1.0 |

| E | 25 | 1 | True | 25 | 25.0 | 25.0 | 0.071 | 1.0 | 25.0 | 25.0 | 4.790e-04 | 1.0 |

| F | 21 | 1 | True | 21 | 21.0 | 21.0 | 0.071 | 1.0 | 21.0 | 21.0 | 4.790e-04 | 1.0 |

| G | 17 | 1 | True | 17 | 17.0 | 17.0 | 0.071 | 1.0 | 17.0 | 17.0 | 4.790e-04 | 1.0 |

| H | 19 | 1 | True | 19 | 19.0 | 19.0 | 0.071 | 1.0 | 19.0 | 19.0 | 4.790e-04 | 1.0 |

| I | 27 | 1 | True | 27 | 27.0 | 27.0 | 0.071 | 1.0 | 27.0 | 27.0 | 4.790e-04 | 1.0 |

| J | 21 | 1 | True | 21 | 21.0 | 21.0 | 0.071 | 1.0 | 21.0 | 21.0 | 4.790e-04 | 1.0 |

| K | 27 | 1 | True | 27 | 27.0 | 27.0 | 0.071 | 1.0 | 27.0 | 27.0 | 4.790e-04 | 1.0 |

| L | 22 | 1 | True | 22 | 22.0 | 22.0 | 0.071 | 1.0 | 22.0 | 22.0 | 4.790e-04 | 1.0 |

| M | 18 | 1 | True | 18 | 18.0 | 18.0 | 0.071 | 1.0 | 18.0 | 18.0 | 4.790e-04 | 1.0 |

| N | 18 | 1 | True | 18 | 18.0 | 18.0 | 0.071 | 1.0 | 18.0 | 18.0 | 4.790e-04 | 1.0 |

| O | 22 | 1 | True | 22 | 22.0 | 22.0 | 0.071 | 1.0 | 22.0 | 22.0 | 4.790e-04 | 1.0 |

| P | 18 | 1 | True | 18 | 18.0 | 18.0 | 0.071 | 1.0 | 18.0 | 18.0 | 4.790e-04 | 1.0 |

| Q | 16 | 1 | True | 16 | 16.0 | 16.0 | 0.071 | 1.0 | 16.0 | 16.0 | 4.790e-04 | 1.0 |

| R | 20 | 1 | True | 20 | 20.0 | 20.0 | 0.071 | 1.0 | 20.0 | 20.0 | 4.790e-04 | 1.0 |

| S | 23 | 1 | True | 23 | 23.0 | 23.0 | 0.071 | 1.0 | 23.0 | 23.0 | 4.790e-04 | 1.0 |

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.
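The classifyFits() call above returned 19 groups (A to S). This is consistent with the third entry of bins being the number of evenly spaced bin edges: 20 edges between minVal and minVal + binRangePC*minVal define 19 intervals. The sketch below illustrates that kind of binning with NumPy and pandas.cut; this reading of the bins argument and the helper name are assumptions, and PEMtk's implementation may differ. With binRangePC = 1e-8 all groups span a tiny fractional range of reduced \(\chi^2\), which is why every group shows the same redchi value (4.790e-04) at the displayed precision.

```python
from string import ascii_uppercase

import numpy as np
import pandas as pd


def classify_redchi(redchi, start, stop, n_edges=20):
    """Bin reduced chi^2 values into lettered groups (A, B, C, ...).

    Assumes n_edges evenly spaced bin edges between start and stop, giving
    n_edges - 1 groups (at most 26); values outside [start, stop] become NaN.
    """
    edges = np.linspace(start, stop, n_edges)
    labels = list(ascii_uppercase[:n_edges - 1])
    return pd.cut(redchi, bins=edges, labels=labels, include_lowest=True)


redchi = pd.Series([1.0, 1.0 + 1e-9, 1.0 + 5e-9, 1.0 + 2e-8])
min_val, frac = redchi.min(), 1e-8
groups = classify_redchi(redchi, min_val, min_val + frac * min_val)
print(groups.tolist())
```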

8.6. Explore candidate result sets

Drill down into a candidate set of results, and examine values and spreads. For more details see the PEMtk documentation [20], especially the analysis routines page. (See also Sect. 2.3 for details on the plotting libraries implemented here.)

8.6.1. Raw results

Plot spreads in magnitude and phase parameters. Statistical plots are available for Seaborn and Holoviews backends, with some slightly different options.
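The violin and box plots produced by paramPlot summarise the distribution of each parameter across the fits in the selected group. The quantities a box plot draws (quartiles and interquartile range) can also be computed directly with pandas, as in the sketch below. It assumes a long-format DataFrame with one row per fit and parameter; the column names Param and value are assumptions for illustration, not necessarily PEMtk's internal names.

```python
import pandas as pd


def spread_summary(df, value='value', by='Param'):
    """First quartile, median, third quartile and IQR of `value` for each group in `by`."""
    q = df.groupby(by)[value].quantile([0.25, 0.5, 0.75]).unstack()
    q.columns = ['q1', 'median', 'q3']
    q['iqr'] = q['q3'] - q['q1']
    return q


toy = pd.DataFrame({'Param': ['a'] * 4 + ['b'] * 4,
                    'value': [1.0, 2.0, 3.0, 4.0, 10.0, 10.0, 10.0, 10.0]})
print(spread_summary(toy))
```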

# From the candidates, select a group for analysis

selGroup = 'A'

# paramPlot can be used to check the spread on each parameter.

# Plots use Seaborn or Holoviews/Bokeh

# Colour-mapping is controlled by the 'hue' parameter; additionally pass hRound for sig. fig. control.

# The remap setting allows for short-hand labels as set in data.lmmu

paramType = 'm' # Set for (m)agnitude or (p)hase parameters

hRound = 14 # Set for cmapping, default may be too small (leads to all grey cmap on points)

data.paramPlot(selectors={'Type':paramType, 'redchiGroup':selGroup}, hue = 'redchi',

backend=paramPlotBackend, hvType='violin',

returnFlag = True, hRound=hRound, remap = 'lmMap');

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

paramType = 'p' # Set for (m)agnitude or (p)hase parameters

data.paramPlot(selectors={'Type':paramType, 'redchiGroup':selGroup}, hue = 'redchi', backend=paramPlotBackend, hvType='violin',

returnFlag = True, hRound=hRound, remap = 'lmMap');

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

8.6.2. Phases, phase shifts & corrections

Depending on how the fit was configured, phases may be defined in different ways. To set the phases relative to a specific parameter and wrap them to a specified range, use the phaseCorrection() method. This defaults to using the first parameter as the reference phase, and wraps to \(-\pi:\pi\). The phase-corrected values are output to a new Type, ‘pc’, and a set of normalised magnitudes to ‘n’. Additional settings can be passed for more control, as shown below.

# Run phase correction routine

# Set absFlag=True for unsigned phases (mapped to 0:pi)

# Set useRef=False to set ref phase as 0, otherwise the reference value is set.

phaseCorrParams={'absFlag':True, 'useRef':False}

data.phaseCorrection(**phaseCorrParams)


*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

Set ref param = PU_SG_PU_1_1_n1_1

| Fit | Type | redchiGroup | PU_SG_PU_1_1_n1_1 | PU_SG_PU_1_n1_1_1 | PU_SG_PU_3_1_n1_1 | PU_SG_PU_3_n1_1_1 | SU_SG_SU_1_0_0_1 | SU_SG_SU_3_0_0_1 |

|---|---|---|---|---|---|---|---|---|

| 1 | m | O | 1.815 | 1.815 | 0.896 | 0.896 | 2.473 | 1.361 |

|  | n | O | 0.451 | 0.451 | 0.223 | 0.223 | 0.615 | 0.338 |

|  | p | O | -0.861 | -0.861 | 0.934 | 0.934 | 1.815 | -0.530 |

|  | pc | O | 0.000 | 0.000 | 1.795 | 1.795 | 2.676 | 0.331 |

| 5 | m | S | 1.815 | 1.815 | 0.896 | 0.896 | 2.473 | 1.362 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1195 | pc | D | 0.000 | 0.000 | 1.794 | 1.794 | 2.675 | 0.331 |

| 1198 | m | H | 1.815 | 1.815 | 0.896 | 0.896 | 2.473 | 1.362 |

|  | n | H | 0.451 | 0.451 | 0.223 | 0.223 | 0.615 | 0.339 |

|  | p | H | -0.861 | -0.861 | 0.933 | 0.933 | 1.814 | -0.530 |

|  | pc | H | 0.000 | 0.000 | 1.794 | 1.794 | 2.675 | 0.331 |

1652 rows × 6 columns
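The 'pc' and 'n' rows in the table above can be reproduced, to the three-decimal rounding shown, from the 'p' and 'm' rows. This assumes that 'pc' is the phase difference from the reference parameter wrapped back into \(-\pi:\pi\) (then taken as an absolute value, since absFlag=True), and that 'n' is the set of magnitudes scaled to unit L2 norm over all six matrix elements. These definitions are inferred from the numbers rather than taken from the PEMtk source, and the function names are hypothetical.

```python
import numpy as np


def phase_correct(phases, ref_index=0, abs_flag=True):
    """Phases relative to a reference parameter, wrapped to (-pi, pi] (or [0, pi] if abs_flag)."""
    p = np.asarray(phases, dtype=float)
    # The exp/angle round trip wraps any phase difference back into (-pi, pi]
    pc = np.angle(np.exp(1j * (p - p[ref_index])))
    return np.abs(pc) if abs_flag else pc


def normalise(magnitudes):
    """Magnitudes scaled to unit L2 norm over the full set."""
    m = np.asarray(magnitudes, dtype=float)
    return m / np.linalg.norm(m)


# Values for fit 1 from the table above (same column order)
p = [-0.861, -0.861, 0.934, 0.934, 1.815, -0.530]
m = [1.815, 1.815, 0.896, 0.896, 2.473, 1.361]
print(np.round(phase_correct(p), 3))
print(np.round(normalise(m), 3))
```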

Examine the new data types, 'n' (normalised magnitudes) and 'pc' (phase-corrected phases):

paramType = 'n'

data.paramPlot(selectors={'Type':paramType, 'redchiGroup':selGroup}, hue = 'redchi',

backend=paramPlotBackend, hvType='violin', kind='box',

returnFlag = True, hRound=hRound, remap = 'lmMap');

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

WARNING:param.Scatter05565: Setting non-parameter attribute kind=box using a mechanism intended only for parameters

paramType = 'pc'

data.paramPlot(selectors={'Type':paramType, 'redchiGroup':selGroup}, hue = 'redchi',

backend=paramPlotBackend, hvType='violin', kind='box',

returnFlag = True, hRound=hRound, remap = 'lmMap');

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

WARNING:param.Scatter05895: Setting non-parameter attribute kind=box using a mechanism intended only for parameters

8.7. Parameter estimation & fidelity

For the case studies, the fit results can be compared directly with the known input parameters, which indicates how well the data define the matrix elements (parameters) in this case. In general, estimating fidelity requires probing the correlations and spread of the results, and comparing them with other (unfitted) results; see Quantum Metrology Vols. 1 & 2 [4, 9] for further discussion.

8.7.1. Best values and statistics

The paramsReport() method computes a final parameter set and associated statistics from a subset of the fit results (here selected by redchiGroup). If reference data are available, as for the case studies herein, the paramsCompare() method can also be used to compare the results with the reference case.

# Parameter summary

data.paramsReport(inds = {'redchiGroup':selGroup})


*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

Set parameter stats to self.paramsSummary.

| Param | Agg | m | n | p | pc |
|---|---|---|---|---|---|
| PU_SG_PU_1_1_n1_1 | min | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | mean | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | median | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | max | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | std | 1.853e-05 | 4.618e-06 | 0.000 | 0.000e+00 |
|  | var | 3.434e-10 | 2.132e-11 | 0.000 | 0.000e+00 |
| PU_SG_PU_1_n1_1_1 | min | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | mean | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | median | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | max | 1.815e+00 | 4.514e-01 | -0.861 | 0.000e+00 |
|  | std | 1.853e-05 | 4.618e-06 | 0.000 | 0.000e+00 |
|  | var | 3.434e-10 | 2.132e-11 | 0.000 | 0.000e+00 |
| PU_SG_PU_3_1_n1_1 | min | 8.959e-01 | 2.229e-01 | -2.656 | 1.794e+00 |
|  | mean | 8.959e-01 | 2.229e-01 | 0.123 | 1.794e+00 |
|  | median | 8.959e-01 | 2.229e-01 | 0.933 | 1.794e+00 |
|  | max | 8.960e-01 | 2.229e-01 | 0.933 | 1.794e+00 |
|  | std | 3.921e-05 | 9.750e-06 | 1.525 | 1.928e-05 |
|  | var | 1.538e-09 | 9.506e-11 | 2.327 | 3.715e-10 |
| PU_SG_PU_3_n1_1_1 | min | 8.959e-01 | 2.229e-01 | -2.656 | 1.794e+00 |
|  | mean | 8.959e-01 | 2.229e-01 | 0.123 | 1.794e+00 |
|  | median | 8.959e-01 | 2.229e-01 | 0.933 | 1.794e+00 |
|  | max | 8.960e-01 | 2.229e-01 | 0.933 | 1.794e+00 |
|  | std | 3.921e-05 | 9.750e-06 | 1.525 | 1.928e-05 |
|  | var | 1.538e-09 | 9.506e-11 | 2.327 | 3.715e-10 |
| SU_SG_SU_1_0_0_1 | min | 2.473e+00 | 6.151e-01 | 1.814 | 2.675e+00 |
|  | mean | 2.473e+00 | 6.152e-01 | 2.025 | 2.675e+00 |
|  | median | 2.473e+00 | 6.152e-01 | 1.814 | 2.675e+00 |
|  | max | 2.473e+00 | 6.152e-01 | 2.747 | 2.675e+00 |
|  | std | 5.007e-05 | 1.243e-05 | 0.396 | 4.848e-05 |
|  | var | 2.507e-09 | 1.546e-10 | 0.157 | 2.350e-09 |
| SU_SG_SU_3_0_0_1 | min | 1.361e+00 | 3.386e-01 | -1.192 | 3.308e-01 |
|  | mean | 1.361e+00 | 3.386e-01 | -0.680 | 3.309e-01 |
|  | median | 1.361e+00 | 3.386e-01 | -0.530 | 3.309e-01 |
|  | max | 1.361e+00 | 3.386e-01 | -0.530 | 3.309e-01 |
|  | std | 9.377e-05 | 2.334e-05 | 0.281 | 3.116e-05 |
|  | var | 8.793e-09 | 5.446e-10 | 0.079 | 9.710e-10 |

# Parameter comparison

# Note this uses phaseCorrParams as set previously for consistency

data.paramsCompare(phaseCorrParams=phaseCorrParams)


*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

Set ref param = PU_SG_PU_1_1_n1_1

Set parameter comparison to self.paramsSummaryComp.

| Fit | Type | PU_SG_PU_1_1_n1_1 | PU_SG_PU_1_n1_1_1 | PU_SG_PU_3_1_n1_1 | PU_SG_PU_3_n1_1_1 | SU_SG_SU_1_0_0_1 | SU_SG_SU_3_0_0_1 |
|---|---|---|---|---|---|---|---|
| ref | m | 1.785 | 1.785 | 0.803 | 0.803 | 2.686 | 1.109 |
|  | n | 0.445 | 0.445 | 0.200 | 0.200 | 0.669 | 0.276 |
|  | p | -0.861 | -0.861 | -3.120 | -3.120 | 2.611 | -0.079 |
|  | pc | 0.000 | 0.000 | 2.259 | 2.259 | 2.811 | 0.782 |

| Type | Source | dType | PU_SG_PU_1_1_n1_1 | PU_SG_PU_1_n1_1_1 | PU_SG_PU_3_1_n1_1 | PU_SG_PU_3_n1_1_1 | SU_SG_SU_1_0_0_1 | SU_SG_SU_3_0_0_1 |
|---|---|---|---|---|---|---|---|---|
| m | mean | num | 1.815e+00 | 1.815e+00 | 8.959e-01 | 8.959e-01 | 2.473e+00 | 1.361e+00 |
|  | ref | num | 1.785e+00 | 1.785e+00 | 8.029e-01 | 8.029e-01 | 2.686e+00 | 1.109e+00 |
|  | diff | % | 1.662e+00 | 1.662e+00 | 1.038e+01 | 1.038e+01 | 8.617e+00 | 1.851e+01 |
|  |  | num | 3.016e-02 | 3.016e-02 | 9.304e-02 | 9.304e-02 | -2.131e-01 | 2.520e-01 |
|  | std | % | 1.021e-03 | 1.021e-03 | 4.377e-03 | 4.377e-03 | 2.025e-03 | 6.889e-03 |
|  |  | num | 1.853e-05 | 1.853e-05 | 3.921e-05 | 3.921e-05 | 5.007e-05 | 9.377e-05 |
|  | diff/std | % | 1.628e+05 | 1.628e+05 | 2.373e+05 | 2.373e+05 | 4.256e+05 | 2.688e+05 |
| n | mean | num | 4.514e-01 | 4.514e-01 | 2.229e-01 | 2.229e-01 | 6.152e-01 | 3.386e-01 |
|  | ref | num | 4.447e-01 | 4.447e-01 | 2.001e-01 | 2.001e-01 | 6.693e-01 | 2.764e-01 |
|  | diff | % | 1.490e+00 | 1.490e+00 | 1.023e+01 | 1.023e+01 | 8.807e+00 | 1.837e+01 |
|  |  | num | 6.725e-03 | 6.725e-03 | 2.279e-02 | 2.279e-02 | -5.418e-02 | 6.221e-02 |
|  | std | % | 1.023e-03 | 1.023e-03 | 4.375e-03 | 4.375e-03 | 2.021e-03 | 6.892e-03 |
|  |  | num | 4.618e-06 | 4.618e-06 | 9.750e-06 | 9.750e-06 | 1.243e-05 | 2.334e-05 |
|  | diff/std | % | 1.456e+05 | 1.456e+05 | 2.338e+05 | 2.338e+05 | 4.357e+05 | 2.666e+05 |
| p | mean | num | -8.610e-01 | -8.610e-01 | 1.230e-01 | 1.230e-01 | 2.025e+00 | -6.796e-01 |
|  | ref | num | -8.610e-01 | -8.610e-01 | -3.120e+00 | -3.120e+00 | 2.611e+00 | -7.868e-02 |
|  | diff | % | 0.000e+00 | 0.000e+00 | 2.637e+03 | 2.637e+03 | 2.896e+01 | 8.842e+01 |
|  |  | num | 0.000e+00 | 0.000e+00 | 3.243e+00 | 3.243e+00 | -5.864e-01 | -6.009e-01 |
|  | std | % | 0.000e+00 | 0.000e+00 | 1.240e+03 | 1.240e+03 | 1.957e+01 | 4.139e+01 |
|  |  | num | 0.000e+00 | 0.000e+00 | 1.525e+00 | 1.525e+00 | 3.963e-01 | 2.813e-01 |
|  | diff/std | % | NaN | NaN | 2.126e+02 | 2.126e+02 | 1.480e+02 | 2.136e+02 |
| pc | mean | num | 0.000e+00 | 0.000e+00 | 1.794e+00 | 1.794e+00 | 2.675e+00 | 3.309e-01 |
|  | ref | num | 0.000e+00 | 0.000e+00 | 2.259e+00 | 2.259e+00 | 2.811e+00 | 7.824e-01 |
|  | diff | % | NaN | NaN | 2.591e+01 | 2.591e+01 | 5.067e+00 | 1.364e+02 |
|  |  | num | 0.000e+00 | 0.000e+00 | -4.649e-01 | -4.649e-01 | -1.356e-01 | -4.515e-01 |
|  | std | % | NaN | NaN | 1.074e-03 | 1.074e-03 | 1.812e-03 | 9.418e-03 |
|  |  | num | 0.000e+00 | 0.000e+00 | 1.928e-05 | 1.928e-05 | 4.848e-05 | 3.116e-05 |
|  | diff/std | % | NaN | NaN | 2.412e+06 | 2.412e+06 | 2.796e+05 | 1.449e+06 |
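The comparison metrics in the table above appear consistent with the following definitions, inferred from the displayed values rather than taken from the PEMtk source: diff (num) \(= \bar{x} - x_{ref}\), diff (%) \(= 100\,|\mathrm{diff}|/|\bar{x}|\), std (%) \(= 100\,\sigma/|\bar{x}|\) and diff/std (%) \(= 100\,|\mathrm{diff}|/\sigma\), with \(\bar{x}\) and \(\sigma\) the mean and standard deviation over the selected fits. A numpy sketch, using a hypothetical helper `compare_metrics()`, reproduces the `p` rows:

```python
import numpy as np

def compare_metrics(mean, ref, std):
    """Fit-vs-reference metrics (sketch; definitions inferred from the table above).

    mean, ref, std : per-parameter mean fitted value, reference value and
                     standard deviation over the selected fits.
    """
    mean, ref, std = (np.asarray(x, dtype=float) for x in (mean, ref, std))
    diff = mean - ref
    # 0/0 gives NaN, as in the table; suppress the corresponding numpy warnings
    with np.errstate(divide='ignore', invalid='ignore'):
        return {
            'diff (num)': diff,
            'diff (%)': 100 * np.abs(diff) / np.abs(mean),
            'std (%)': 100 * std / np.abs(mean),
            'diff/std (%)': 100 * np.abs(diff) / std,
        }

# 'p' (phase) rows for the PU_SG_PU_1_1_n1_1, PU_SG_PU_3_1_n1_1,
# SU_SG_SU_1_0_0_1 and SU_SG_SU_3_0_0_1 columns above
res = compare_metrics(mean=[-0.861, 0.123, 2.025, -0.6796],
                      ref=[-0.861, -3.120, 2.611, -0.07868],
                      std=[0.0, 1.525, 0.3963, 0.2813])
for k, v in res.items():
    print(k, np.round(v, 2))
```

Read this way, the very large diff/std values for the magnitudes (around \(10^5\)%) suggest that the selected fits agree closely with each other but are offset from the reference, so the spread over fits alone would underestimate the error in this (noisy) case.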

# Display above results with column name remapping to (l,m) labels only

# With Pandas functionality

data.paramsSummaryComp.rename(columns=data.lmmu['lmMap'])

# With utility method

# summaryRenamed = pemtk.fit._util.renameParams(data.paramsSummaryComp, data.lmmu['lmMap'])

# summaryRenamed

| Type | Source | dType | 1,1 | 1,-1 | 3,1 | 3,-1 | 1,0 | 3,0 |
|---|---|---|---|---|---|---|---|---|
| m | mean | num | 1.815e+00 | 1.815e+00 | 8.959e-01 | 8.959e-01 | 2.473e+00 | 1.361e+00 |
|  | ref | num | 1.785e+00 | 1.785e+00 | 8.029e-01 | 8.029e-01 | 2.686e+00 | 1.109e+00 |
|  | diff | % | 1.662e+00 | 1.662e+00 | 1.038e+01 | 1.038e+01 | 8.617e+00 | 1.851e+01 |
|  |  | num | 3.016e-02 | 3.016e-02 | 9.304e-02 | 9.304e-02 | -2.131e-01 | 2.520e-01 |
|  | std | % | 1.021e-03 | 1.021e-03 | 4.377e-03 | 4.377e-03 | 2.025e-03 | 6.889e-03 |
|  |  | num | 1.853e-05 | 1.853e-05 | 3.921e-05 | 3.921e-05 | 5.007e-05 | 9.377e-05 |
|  | diff/std | % | 1.628e+05 | 1.628e+05 | 2.373e+05 | 2.373e+05 | 4.256e+05 | 2.688e+05 |
| n | mean | num | 4.514e-01 | 4.514e-01 | 2.229e-01 | 2.229e-01 | 6.152e-01 | 3.386e-01 |
|  | ref | num | 4.447e-01 | 4.447e-01 | 2.001e-01 | 2.001e-01 | 6.693e-01 | 2.764e-01 |
|  | diff | % | 1.490e+00 | 1.490e+00 | 1.023e+01 | 1.023e+01 | 8.807e+00 | 1.837e+01 |
|  |  | num | 6.725e-03 | 6.725e-03 | 2.279e-02 | 2.279e-02 | -5.418e-02 | 6.221e-02 |
|  | std | % | 1.023e-03 | 1.023e-03 | 4.375e-03 | 4.375e-03 | 2.021e-03 | 6.892e-03 |
|  |  | num | 4.618e-06 | 4.618e-06 | 9.750e-06 | 9.750e-06 | 1.243e-05 | 2.334e-05 |
|  | diff/std | % | 1.456e+05 | 1.456e+05 | 2.338e+05 | 2.338e+05 | 4.357e+05 | 2.666e+05 |
| p | mean | num | -8.610e-01 | -8.610e-01 | 1.230e-01 | 1.230e-01 | 2.025e+00 | -6.796e-01 |
|  | ref | num | -8.610e-01 | -8.610e-01 | -3.120e+00 | -3.120e+00 | 2.611e+00 | -7.868e-02 |
|  | diff | % | 0.000e+00 | 0.000e+00 | 2.637e+03 | 2.637e+03 | 2.896e+01 | 8.842e+01 |
|  |  | num | 0.000e+00 | 0.000e+00 | 3.243e+00 | 3.243e+00 | -5.864e-01 | -6.009e-01 |
|  | std | % | 0.000e+00 | 0.000e+00 | 1.240e+03 | 1.240e+03 | 1.957e+01 | 4.139e+01 |
|  |  | num | 0.000e+00 | 0.000e+00 | 1.525e+00 | 1.525e+00 | 3.963e-01 | 2.813e-01 |
|  | diff/std | % | NaN | NaN | 2.126e+02 | 2.126e+02 | 1.480e+02 | 2.136e+02 |
| pc | mean | num | 0.000e+00 | 0.000e+00 | 1.794e+00 | 1.794e+00 | 2.675e+00 | 3.309e-01 |
|  | ref | num | 0.000e+00 | 0.000e+00 | 2.259e+00 | 2.259e+00 | 2.811e+00 | 7.824e-01 |
|  | diff | % | NaN | NaN | 2.591e+01 | 2.591e+01 | 5.067e+00 | 1.364e+02 |
|  |  | num | 0.000e+00 | 0.000e+00 | -4.649e-01 | -4.649e-01 | -1.356e-01 | -4.515e-01 |
|  | std | % | NaN | NaN | 1.074e-03 | 1.074e-03 | 1.812e-03 | 9.418e-03 |
|  |  | num | 0.000e+00 | 0.000e+00 | 1.928e-05 | 1.928e-05 | 4.848e-05 | 3.116e-05 |
|  | diff/std | % | NaN | NaN | 2.412e+06 | 2.412e+06 | 2.796e+05 | 1.449e+06 |


# Plot values vs. reference cases

# NOTE - experimental code, not yet consolidated and wrapped in PEMtk

paramType = 'm'

# Set new DataFrame including "vary" info (missing in default case)

pDict = 'dfWideTest'

# Try using existing function with extra index set...

data._setWide(indexDims = ['Fit','Type','chisqrGroup','redchiGroup','batch', 'vary'], dataWide='dfWideTest')

# WITH lmMAP remap - good if (l,m) are unique labels

plotData = data.paramPlot(dataDict = pDict, selectors={'vary':True, 'Type':paramType, 'redchiGroup':selGroup}, hue = 'chisqr',

backend='hv', hvType='violin', returnFlag = True, plotScatter=True, hRound=hRound, remap='lmMap')

# NO REMAP CASE

# plotData = data.paramPlot(dataDict = pDict, selectors={'vary':True, 'Type':paramType, 'redchiGroup':selGroup}, hue = 'chisqr',

# backend='hv', hvType='violin', returnFlag = True, plotScatter=True, hRound=hRound) #, remap='lmMap')

p1 = data.data['plots']['paramPlot']

# Plot ref params... not currently supported by paramPlot(), which also expects fit data, so it can't be reused directly here.

dataTest = data.data['fits']['dfRef'].copy()

# data.paramPlot(dataDict = 'dfRef')

# Set axis remap

# dataTest.replace({'Param':data.lmmu['lmMap']}, inplace=True)

# Subset

dataTestSub = data._subsetFromXS(selectors = {'Type':paramType}, data = dataTest)

p2 = dataTestSub.hvplot.scatter(x='Param',y='value', marker='dash', size=500, color='red')

p1*p2

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

*** Warning: found MultiIndex for DataFrame data.index - checkDims may have issues with Pandas MultiIndex, but will try anyway.

8.8. Using the reconstructed matrix elements

The results tables are accessible directly, and there are also methods to reformat the best fit results for use in further calculations.

# self.paramsSummary contains the results above as a Pandas DataFrame, so the usual Pandas methods can be applied.

data.paramsSummary['data'].describe()

| Param | PU_SG_PU_1_1_n1_1 | PU_SG_PU_1_n1_1_1 | PU_SG_PU_3_1_n1_1 | PU_SG_PU_3_n1_1_1 | SU_SG_SU_1_0_0_1 | SU_SG_SU_3_0_0_1 |

|---|---|---|---|---|---|---|

| count | 124.000 | 124.000 | 124.000 | 124.000 | 124.000 | 124.000 |

| mean | 0.351 | 0.351 | 0.759 | 0.759 | 1.947 | 0.338 |

| std | 0.972 | 0.972 | 1.008 | 1.008 | 0.831 | 0.738 |

| min | -0.861 | -0.861 | -2.656 | -2.656 | 0.615 | -1.192 |

| 25% | -0.215 | -0.215 | 0.223 | 0.223 | 1.514 | 0.116 |

| 50% | 0.226 | 0.226 | 0.896 | 0.896 | 2.473 | 0.335 |

| 75% | 0.792 | 0.792 | 1.149 | 1.149 | 2.675 | 0.594 |

| max | 1.815 | 1.815 | 1.794 | 1.794 | 2.747 | 1.361 |

# To set matrix elements from aggregate fit results, use `setAggMatE` for Pandas

data.setAggMatE(simpleForm = True)

data.data['agg']['matEpd']

Set reformatted aggregate data to self.data[agg][matEpd].

| Cont | l | m | mu | m | n | p | pc | comp | compC | labels |
|---|---|---|---|---|---|---|---|---|---|---|
| PU | 1 | -1 | 1 | 1.815 | 0.451 | -0.861 | 0.000 | 1.183-1.377j | 0.451+0.000j | 1,-1 |
|  |  | 1 | -1 | 1.815 | 0.451 | -0.861 | 0.000 | 1.183-1.377j | 0.451+0.000j | 1,1 |
|  | 3 | -1 | 1 | 0.896 | 0.223 | 0.123 | 1.794 | 0.889+0.110j | -0.049+0.217j | 3,-1 |
|  |  | 1 | -1 | 0.896 | 0.223 | 0.123 | 1.794 | 0.889+0.110j | -0.049+0.217j | 3,1 |
| SU | 1 | 0 | 0 | 2.473 | 0.615 | 2.025 | 2.675 | -1.085+2.222j | -0.550+0.277j | 1,0 |
|  | 3 | 0 | 0 | 1.361 | 0.339 | -0.680 | 0.331 | 1.059-0.855j | 0.320+0.110j | 3,0 |
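The complex columns in the table above appear consistent with `comp` \(= m\,e^{ip}\) and `compC` \(= n\,e^{i\,pc}\), where \(n\) is the magnitude \(m\) rescaled to unit L2 norm over the six matrix elements. The numpy sketch below checks this using the rounded table values; it is an inference from the displayed numbers, not the `setAggMatE()` implementation.

```python
import numpy as np

# Rows of the aggregate table above, in the same order (labels as l,m)
labels = ['1,-1', '1,1', '3,-1', '3,1', '1,0', '3,0']
mag = np.array([1.815, 1.815, 0.896, 0.896, 2.473, 1.361])       # m
phase = np.array([-0.861, -0.861, 0.123, 0.123, 2.025, -0.680])  # p
phase_corr = np.array([0.0, 0.0, 1.794, 1.794, 2.675, 0.331])    # pc

# Assumption: n is m normalised to unit L2 norm over all matrix elements
norm_mag = mag / np.linalg.norm(mag)

comp = mag * np.exp(1j * phase)                # raw magnitudes and phases
compC = norm_mag * np.exp(1j * phase_corr)     # normalised, phase-corrected

for lab, a, b in zip(labels, comp, compC):
    print(lab, np.round(a, 3), np.round(b, 3))
```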

# To set matrix elements from aggregate fit results, use `aggToXR` for Xarray

# data.aggToXR(refKey = 'orb5', returnType = 'ds', conformDims=True) # use full ref dataset

data.aggToXR(refKey = 'subset', returnType = 'ds', conformDims=True) # Subselected matE

Added dim Total

Added dim Targ

Added dim Total

Added dim Targ

Set XR dataset for self.data['agg']['matE']


data.data['agg']['matE']

<xarray.Dataset>

Dimensions: (Type: 2, Sym: 4, mu: 3, LM: 6, it: 1)

Coordinates:

* Type (Type) object 'comp' 'compC'

* Sym (Sym) MultiIndex

- Cont (Sym) object 'PU' 'PU' 'SU' 'SU'

- Targ (Sym) object 'SG' 'U' 'SG' 'U'

- Total (Sym) object 'PU' 'U' 'SU' 'U'

* mu (mu) int64 -1 0 1

* LM (LM) MultiIndex

- l (LM) int64 1 1 1 3 3 3

- m (LM) int64 -1 0 1 -1 0 1

* it (it) int64 1

Eke float64 1.1

Ehv float64 18.4

SF complex128 (2.2237521+3.6277801j)

Data variables:

comp (Type, mu, Sym, LM) complex128 (nan+nanj) (nan+nanj) ... (nan+nanj)

compC (Type, mu, Sym, LM) complex128 (nan+nanj) (nan+nanj) ... (nan+nanj)

subset (LM, Sym, mu, it) complex128 (nan+nanj) (nan+nanj) ... (nan+nanj)

8.8.1. Density matrices

The cells below use new (experimental) code to compute, plot and compare density matrices for the reference and reconstructed matrix elements; see Sect. 3.4 for discussion. The code is adapted from the MF reconstruction page of the PEMtk documentation [20] (the original analysis for Ref. [3], illustrating the \(N_2\) case). If the reconstruction is good, the differences (fidelity) should be on the order of the experimental noise level/reconstruction uncertainty, around 10% in the case studies herein. More generally, the values and patterns in the matrices can also indicate which aspects of the retrieval worked well, and which values are poorly defined or poorly recovered from the given dataset.
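As a simplified illustration of the comparison, the numpy sketch below assumes a pure-state density matrix \(\rho_{ij} = \psi_i \psi_j^*\), with \(\psi\) built from the six (l,m) values reported by paramsCompare() (normalised magnitudes `n` and corrected phases `pc`), rather than from the full (LM, mu, Sym) arrays handled by `density.densityCalc()` in the cells below. The helpers `unsigned_phase()` and `density_matrix()` are hypothetical, and normalising by \(\max|\rho_{ij}|\) is a choice made for this sketch.

```python
import numpy as np

def unsigned_phase(z):
    """Drop the sign of each phase: |z| * exp(i * |arg z|)."""
    z = np.asarray(z, dtype=complex)
    return np.abs(z) * np.exp(1j * np.abs(np.angle(z)))

def density_matrix(psi):
    """Pure-state density matrix rho_ij = psi_i * conj(psi_j), scaled so max |rho_ij| = 1."""
    psi = np.asarray(psi, dtype=complex).ravel()
    rho = np.outer(psi, psi.conj())
    return rho / np.abs(rho).max()

# n and pc values from the paramsCompare() output, ordered (1,1), (1,-1), (3,1), (3,-1), (1,0), (3,0)
n_ref = np.array([0.4447, 0.4447, 0.2001, 0.2001, 0.6693, 0.2764])
pc_ref = np.array([0.0, 0.0, 2.259, 2.259, 2.811, 0.7824])
n_fit = np.array([0.4514, 0.4514, 0.2229, 0.2229, 0.6152, 0.3386])
pc_fit = np.array([0.0, 0.0, 1.794, 1.794, 2.675, 0.3309])

rho_ref = density_matrix(unsigned_phase(n_ref * np.exp(1j * pc_ref)))
rho_fit = density_matrix(unsigned_phase(n_fit * np.exp(1j * pc_fit)))
rho_diff = rho_ref - rho_fit

# Largest element-wise deviation, on the same (max-normalised) scale as the matrices
print(np.round(np.abs(rho_diff).max(), 3))
```

Because \(\rho\) depends only on relative phases, and the unsigned-phase step maps \(z\) and \(z^*\) to the same value, this kind of comparison is insensitive to the phase-sign ambiguities seen in the fit results.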


# Define phase function to test unsigned phases only
def unsignedPhase(da):
    """Convert to unsigned phases, i.e. return |da| * exp(i*|arg(da)|)."""
    # Set magnitude and phase
    mag = da.pipe(np.abs)
    phase = da.pipe(np.angle)  # Returns np array only!
    # Set unsigned values (magnitudes are already non-negative)
    magUS = mag.pipe(np.abs)
    # phaseUS = phase.pipe(np.abs)
    phaseUS = np.abs(phase)
    # Recombine as complex values
    compFixed = magUS * np.exp(1j*phaseUS)
    # return mag,phase
    return compFixed


# Compute density matrices for retrieved and reference cases, and compare

# v2 - as v1, but differences for unsigned phase case & fix labels

# 26/07/22: messy but working. Some labelling tricks to push back into matPlot() routine

# Import routines

from epsproc.calc import density

# Compose density matrix

# Set dimensions/state vector/representation

# These must be in original data, but will be restacked as necessary to define the effective basis space.

denDims = ['LM', 'mu']

selDims = {'Type':'L'}

pTypes=['r','i']

thres = 1e-2 # 0.2 # Threshold out l>3 terms if using full 'orb5' set.

normME = False

normDen = 'max'

usPhase = True # Use unsigned phases?

# Calculate - Ref case

# matE = data.data['subset']['matE']

# Set data from master class

# k = 'orb5' # N2 orb5 (SG) dataset

k = 'subset'

matE = data.data[k]['matE']

if normME:
    matE = matE/matE.max()

if usPhase:
    matE = unsignedPhase(matE)

daOut, *_ = density.densityCalc(matE, denDims = denDims, selDims = selDims, thres = thres)  # OK

if normDen=='max':
    daOut = daOut/daOut.max()
elif normDen=='trace':
    daOut = daOut/(daOut.sum('Sym').pipe(np.trace)**2)  # Need sym sum here to get 2D trace

# daPlot = density.matPlot(daOut.sum('Sym'))

daPlot = density.matPlot(daOut.sum('Sym'), pTypes=pTypes)

# Retrieved

matE = data.data['agg']['matE']['compC']

selDims = {'Type':'compC'} # For stacked DS case need to set selDims again here to avoid null data selection below.

if normME:
    matE = matE/matE.max()

if usPhase:
    matE = unsignedPhase(matE)

daOut2, *_ = density.densityCalc(matE, denDims = denDims, selDims = selDims, thres = thres)  # OK

if normDen=='max':
    daOut2 = daOut2/daOut2.max()
elif normDen=='trace':
    daOut2 = daOut2/(daOut2.sum('Sym').pipe(np.trace)**2)

daPlot2 = density.matPlot(daOut2.sum('Sym'), pTypes=pTypes) #.sel(Eke=slice(0.5,1.5,1)))

# Compute difference

if usPhase:
    daDiff = unsignedPhase(daOut.sum('Sym')) - unsignedPhase(daOut2.sum('Sym'))
else:
    daDiff = daOut.sum('Sym') - daOut2.sum('Sym')

daDiff.name = 'Difference'

daPlotDiff = density.matPlot(daDiff, pTypes=pTypes)

#******** Plot

daLayout = (daPlot.redim(pType='Component').layout('Component').relabel('(a) Reference density matrix (unsigned phases)') + daPlot2.opts(show_title=False).layout('pType').opts(show_title=True).relabel('(b) Reconstructed') +

daPlotDiff.opts(show_title=False).layout('pType').opts(show_title=True).relabel('(c) Difference')).cols(1)

daLayout.opts(ep.plot.hvPlotters.opts.HeatMap(width=300, frame_width=300, aspect='square', tools=['hover'], colorbar=True, cmap='coolwarm')) # .opts(show_title=False) # .opts(title="Custom Title") #OK

# Notes on titles... see https://holoviews.org/user_guide/Customizing_Plots.html

#

# .relabel('Test') and .opts(title="Custom Title") OK for whole row titles

#

# daPlot2.opts(show_title=False).layout('pType').opts(show_title=True).relabel('Recon') Turns off titles per plot, then titles layout

#

# .redim() to modify individual plot group label (from dimension name)

# Glue figure for later - real part only in this case

# Also clean up axis labels from default state labels ('LM' and 'LM_p' in this case).

glue("N2-densityComp", daLayout)

Set plot kdims to ['l,m,mu', 'l,m,mu_p']; pass kdims = [dim1,dim2] for more control.

Set plot kdims to ['l,m,mu', 'l,m,mu_p']; pass kdims = [dim1,dim2] for more control.

Set plot kdims to ['l,m,mu', 'l,m,mu_p']; pass kdims = [dim1,dim2] for more control.

WARNING:param.HeatMapPlot07822: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

WARNING:param.HeatMapPlot07830: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

WARNING:param.HeatMapPlot07859: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

WARNING:param.HeatMapPlot07866: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

WARNING:param.HeatMapPlot07894: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

WARNING:param.HeatMapPlot07901: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

Fig. 8.3 Density matrix comparison: rows show (a) the reference case (with the signs of the phases removed), (b) the reconstructed case and (c) their differences. Columns show (left) the imaginary and (right) the real components. If the reconstruction is good, the differences (fidelity) should be on the order of the experimental noise level/reconstruction uncertainty, around 10% in the case studies herein.

8.8.2. Plot MF PADs

The routines below are adapted from the MF reconstruction data processing page of the PEMtk documentation [20] (the original analysis page for Ref. [3], illustrating the \(N_2\) case). They call self.mfpadNumeric() for the numerical expansion of the MF-PADs, and self.padPlot() for plotting. Results are illustrated for the retrieved and reference cases in Fig. 8.4 and Fig. 8.5 respectively, and the differences (reference minus fitted results) in Fig. 8.6.


dataIn = data.data['agg']['matE'].copy()

# Restack for MFPAD calculation and plotter

# Single Eke dim case

# Create empty ePSbase class instance, and set data

# Can then use existing padPlot() routine for all data

from epsproc.classes.base import ePSbase

dataTest = ePSbase(verbose = 1)

aList = [i for i in dataIn.data_vars] # List of arrays

# Loop version & propagate attrs

dataType = 'matE'

for item in aList:
    if item.startswith('sub'):
        dataTest.data[item] = {dataType : dataIn[item]}
    else:
        selType = item
        dataTest.data[item] = {dataType : dataIn[item].sel({'Type':selType})}

    # Push singleton Eke value to dim for plotter
    dataTest.data[item][dataType] = dataTest.data[item][dataType].expand_dims('Eke')


# Compute MFPADs for a range of cases

# Set Euler angs to include diagonal pol case

pRot = [0, 0, np.pi/2, 0]

tRot = [0, np.pi/2, np.pi/2, np.pi/4]

cRot = [0, 0, 0, 0]

labels = ['z','x','y', 'd']

eulerAngs = np.array([labels, pRot, tRot, cRot]).T # List form to use later, rows per set of angles

# Should also use MFBLM function below instead of numeric version?

# Numeric version is handy for direct surface and difference case.

R = ep.setPolGeoms(eulerAngs = eulerAngs)

# Comparison and diff

pKey = [i for i in dataIn.data_vars if i!='comp'] # List of arrays

dataTest.mfpadNumeric(keys=pKey, R = R) # Compute MFPADs for each set of matrix elements using numerical routine

dataTest.data['diff'] = {'TX': dataTest.data['subset']['TX'].sum('Sym')-dataTest.data['compC']['TX'].sum('Sym')} # Add sum over sym to force matching dims

pKey.extend(['diff'])

# Plot - all cases

# Now run in separate cells below for more stable output

# Erange=[1,2,1] # Set for a range of Ekes

# Eplot = {'Eke':data.selOpts['matE']['inds']['Eke']} # Plot for selected Eke (as used for fitting)

# print(f"\n*** Plotting for keys = {pKey}, one per row ***\n") # Note plot labels could do with some work!

# dataTest.padPlot(keys=pKey, Erange=Erange, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

# Change default plotting config, and then plot in separate cells below

ep.plot.hvPlotters.setPlotters(width=1000, height=500)

* Set Holoviews with bokeh.
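To see why the four Euler-angle sets above correspond to the \(z\), \(x\), \(y\) and "diagonal" \(d\) polarization geometries, the numpy sketch below rotates the lab \(z\)-axis (polarization) into the molecular frame. It assumes each (phi, theta, chi) triple is an active ZYZ rotation \(R = R_z(\phi) R_y(\theta) R_z(\chi)\); the exact convention used by `ep.setPolGeoms()` is not confirmed here, although under this convention the four triples reproduce the labels used in the figures.

```python
import numpy as np

def rot_z(a):
    """Active rotation by angle a (radians) about z."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def rot_y(a):
    """Active rotation by angle a (radians) about y."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

# Euler angles as set in the cell above
labels = ['z', 'x', 'y', 'd']
pRot = [0, 0, np.pi/2, 0]
tRot = [0, np.pi/2, np.pi/2, np.pi/4]
cRot = [0, 0, 0, 0]

ez = np.array([0.0, 0.0, 1.0])
directions = {}
for lab, p, t, c in zip(labels, pRot, tRot, cRot):
    R = rot_z(p) @ rot_y(t) @ rot_z(c)  # assumed ZYZ convention
    directions[lab] = R @ ez            # rotated polarization axis

for lab, v in directions.items():
    print(lab, np.round(v, 3))
```

The \(d\) case gives a polarization vector of \((1/\sqrt{2}, 0, 1/\sqrt{2})\), i.e. at 45 degrees to the \(z\)-axis in the \(xz\)-plane, matching the figure captions.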


# Plot results from reconstructed matE

pKey = 'compC'

print(f"\n*** Plotting for keys = {pKey} ***\n") # Note plot labels could do with some work!

# dataTest.padPlot(keys=pKey, Erange=Erange, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

Eplot = {'Eke':data.selOpts['matE']['inds']['Eke']} # Plot for selected Eke (as used for fitting)

dataTest.padPlot(keys=pKey, selDims=Eplot, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

# And GLUE for display later with caption

figObj = dataTest.data[pKey]['plots']['TX']['polar'][0]

glue("N2-compC", figObj)

*** Plotting for keys = compC ***

Summing over dims: {'Sym'}

Plotting from self.data[compC][TX], facetDims=['Labels', None], pType=a2 with backend=pl.

*** WARNING: plot dataset has min value < 0, min = (-0.30055199647727293+0.013934080236283635j). This may be unphysical and/or result in plotting issues.

Set plot to self.data['compC']['plots']['TX']['polar']

Fig. 8.4 MF-PADs computed from the retrieved matrix elements for \((x,y,z,d)\) polarization geometries, where \(d\) is the “diagonal” case with the polarization axis at 45 degrees to the \(z\)-axis.


# Plot results from reference matE

pKey = 'subset'

print(f"\n*** Plotting for keys = {pKey} ***\n") # Note plot labels could do with some work!

# dataTest.padPlot(keys=pKey, Erange=Erange, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

Eplot = {'Eke':data.selOpts['matE']['inds']['Eke']} # Plot for selected Eke (as used for fitting)

dataTest.padPlot(keys=pKey, selDims=Eplot, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

# And GLUE for display later with caption

figObj = dataTest.data[pKey]['plots']['TX']['polar'][0]

glue("N2-ref", figObj)

*** Plotting for keys = subset ***

Summing over dims: {'Sym'}

Plotting from self.data[subset][TX], facetDims=['Labels', None], pType=a2 with backend=pl.

*** WARNING: plot dataset has min value < 0, min = (-0.994014724613836+0.6180516033545451j). This may be unphysical and/or result in plotting issues.

Set plot to self.data['subset']['plots']['TX']['polar']

Fig. 8.5 MF-PADs computed from the reference ab initio matrix elements for \((x,y,z,d)\) polarization geometries, where \(d\) is the “diagonal” case with the polarization axis at 45 degrees to the \(z\)-axis.


# Plot normalised differences

pKey = 'diff'

print(f"\n*** Plotting for keys = {pKey} ***\n") # Note plot labels could do with some work!

# dataTest.padPlot(keys=pKey, Erange=Erange, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

Eplot = {'Eke':data.selOpts['matE']['inds']['Eke']} # Plot for selected Eke (as used for fitting)

dataTest.padPlot(keys=pKey, selDims=Eplot, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

# And GLUE for display later with caption

figObj = dataTest.data[pKey]['plots']['TX']['polar'][0]

glue("N2-diff", figObj)

*** Plotting for keys = diff ***

Summing over dims: set()

Plotting from self.data[diff][TX], facetDims=['Labels', None], pType=a2 with backend=pl.

*** WARNING: plot dataset has min value < 0, min = (-0.7315171028213685+0.3781422641976339j). This may be unphysical and/or result in plotting issues.

Set plot to self.data['diff']['plots']['TX']['polar']

Fig. 8.6 MF-PAD differences between the retrieved and reference cases for \((x,y,z,d)\) polarization geometries, where \(d\) is the “diagonal” case with the polarization axis at 45 degrees to the \(z\)-axis. Note that the differences are normalised to emphasize their shapes, but not their magnitudes; see the density matrix comparisons for a more rigorous fidelity analysis.


# Check max differences (abs values)

maxDiff = dataTest.data['diff']['plots']['TX']['pData'].max(dim=['Theta','Phi']) #.sum(['Theta','Phi']).max() #.max(dim='Eke')

maxDiff.to_pandas()

Labels

z 0.681

x 0.783

y 0.783

d 0.858

dtype: float64


# Check case without phase correction too - this should indicate poor agreement in general

pKey = 'comp'

dataTest.mfpadNumeric(keys=pKey, R = R)

dataTest.padPlot(keys=pKey, selDims=Eplot, backend='pl',returnFlag=True, plotFlag=True) # Generate plotly polar surf plots for each dataset

Summing over dims: {'Sym'}

Plotting from self.data[comp][TX], facetDims=['Labels', None], pType=a2 with backend=pl.

*** WARNING: plot dataset has min value < 0, min = (-0.9614448805221587+0.9474499507819027j). This may be unphysical and/or result in plotting issues.

Set plot to self.data['comp']['plots']['TX']['polar']