3.7. Information content & sensitivity#

A useful tool in considering the possibility of matrix element retrieval is the response, or sensitivity, of the experimental observables to the matrix elements of interest. Aspects of this have already been explored in Sect. 3.3, where consideration of the various geometric tensors (or geometric basis set) provided a route to investigating the coupling - hence sensitivity - of various parameters into product terms. In particular the tensor products discussed in Sect. 3.3.9, including the full channel (response) functions \(\varUpsilon_{L,M}^{u,\zeta\zeta'}\) ((3.18) and (3.19)), can be used to examine the overall sensitivity of a given measurement to the underlying observables. By careful consideration of the problem at hand, experiments may then be tailored for particular cases based on these sensitivities. A related question, is how a given experimental sensitivity might be more readily quantified, and interpreted, for reconstruction problems, in a simpler manner. In general, this can be termed as the information content of the measurement(s); an important aspect of such a metric is that it should be readily interpretable and, ideally, related to whether a reconstruction will be possible in a given case (this has, for example, been considered by other authors for specific cases, e.g. Refs. [113, 124]).

Work in this direction is ongoing, and some thoughts are given below. In particular, the use of the observable \(\beta_{L,M}\) presents an experimental route to (roughly) define a form of information content, whilst metrics derived from channel functions or density matrices may present a more rigorous theoretical route to a useful parameterization of information content.

3.7.1. Numerical setup#

This follows the setup in Sect. 3.3 Tensor formulation of photoionization, using a symmetry-based set of basis functions for demonstration purposes. (Repeated code is hidden in PDF version.)

Show code cell content

# Run default config - may need to set full path here

%run '../scripts/setup_notebook.py'

# Override plotters backend?

# plotBackend = 'pl'

*** Setting up notebook with standard Quantum Metrology Vol. 3 imports...

For more details see https://pemtk.readthedocs.io/en/latest/fitting/PEMtk_fitting_basic_demo_030621-full.html

To use local source code, pass the parent path to this script at run time, e.g. "setup_fit_demo ~/github"

*** Running: 2023-12-07 10:43:36

Working dir: /home/jovyan/jake-home/buildTmp/_latest_build/html/doc-source/part1

Build env: html

None

* Loading packages...

* sparse not found, sparse matrix forms not available.

* natsort not found, some sorting functions not available.

* Setting plotter defaults with epsproc.basicPlotters.setPlotters(). Run directly to modify, or change options in local env.

* Set Holoviews with bokeh.

* pyevtk not found, VTK export not available.

* Set Holoviews with bokeh.

Jupyter Book : 0.15.1

External ToC : 0.3.1

MyST-Parser : 0.18.1

MyST-NB : 0.17.2

Sphinx Book Theme : 1.0.1

Jupyter-Cache : 0.6.1

NbClient : 0.7.4

Show code cell content

# Setup symmetry-defined matrix elements using PEMtk

%run '../scripts/setup_symmetry_basis_tensors.py'

*** Setting up basis set for symmetry-defined matrix elements, see Quantum Metrology Vol. 3 Sect. 3.3...

Set symmetry=D2h, lmax=4

*** Mapping coeffs to ePSproc dataType = matE

Remapped dims: {'C': 'Cont', 'mu': 'it'}

Added dim Eke

Added dim Targ

Added dim Total

Added dim mu

Added dim Type

Found dipole symmetries:

{'B1u': {'m': [0], 'pol': ['z']}, 'B2u': {'m': [-1, 1], 'pol': ['y']}, 'B3u': {'m': [-1, 1], 'pol': ['x']}}

*** Mapping coeffs to ePSproc dataType = BLM

Remapped dims: {'C': 'Cont', 'mu': 'muX'}

Added dim Eke

Added dim P

Added dim T

Added dim C

*** Mapping coeffs to ePSproc dataType = matE

Remapped dims: {'C': 'Cont', 'mu': 'muX'}

Added dim Eke

Added dim Targ

Added dim Total

Added dim mu

Added dim it

Added dim Type

*** Assigning matrix elements and computing betas...

Set channels neutral sym=A1g, ion sym=A1g

*** Updated self.coeffs['matE'] with new coords.

Assigned 'Total' from A1g x A1g = ['A1g']

Assigned 'Total' from A1u x A1g = ['A1u']

Assigned 'Total' from B1g x A1g = ['B1g']

Assigned 'Total' from B1u x A1g = ['B1u']

Assigned 'Total' from B2g x A1g = ['B2g']

Assigned 'Total' from B2u x A1g = ['B2u']

Assigned 'Total' from B3g x A1g = ['B3g']

Assigned 'Total' from B3u x A1g = ['B3u']

*** Updated self.coeffs['matE'] with new coords.

Assigned dipole-allowed terms for dim = 'Cont' to self.coeffs['symAllowed']

Product basis elements: dict_keys(['BLMtableResort', 'polProd', 'phaseConvention', 'BLMRenorm'])

Full basis elements: dict_keys(['QNs', 'EPRX', 'lambdaTerm', 'BLMtable', 'BLMtableResort', 'AFterm', 'AKQS', 'polProd', 'phaseConvention', 'BLMRenorm', 'matEmult'])

*** Setting trial results for linear ramp ADMs.

Subselected from dataset 'ADM', dataType 'ADM': 50 from 50 points (100.00%)

Computing BLMs for linear ramp case...

| allowed | m | pol | result | terms | ||

|---|---|---|---|---|---|---|

| Dipole | Target | |||||

| B1u | A1g | False | [0] | ['z'] | ['B1u'] | ['A1g', 'A1g'] |

| B1g | False | [0] | ['z'] | ['A1u'] | ['A1g', 'A1g'] | |

| A1u | False | [0] | ['z'] | ['B1g'] | ['A1g', 'A1g'] | |

| B1u | True | [0] | ['z'] | ['A1g'] | ['A1g', 'A1g'] | |

| B3g | False | [0] | ['z'] | ['B2u'] | ['A1g', 'A1g'] | |

| B3u | False | [0] | ['z'] | ['B2g'] | ['A1g', 'A1g'] | |

| B2g | False | [0] | ['z'] | ['B3u'] | ['A1g', 'A1g'] | |

| B2u | False | [0] | ['z'] | ['B3g'] | ['A1g', 'A1g'] | |

| B2u | A1g | False | [-1, 1] | ['y'] | ['B2u'] | ['A1g', 'A1g'] |

| B1g | False | [-1, 1] | ['y'] | ['B3u'] | ['A1g', 'A1g'] | |

| A1u | False | [-1, 1] | ['y'] | ['B2g'] | ['A1g', 'A1g'] | |

| B1u | False | [-1, 1] | ['y'] | ['B3g'] | ['A1g', 'A1g'] | |

| B3g | False | [-1, 1] | ['y'] | ['B1u'] | ['A1g', 'A1g'] | |

| B3u | False | [-1, 1] | ['y'] | ['B1g'] | ['A1g', 'A1g'] | |

| B2g | False | [-1, 1] | ['y'] | ['A1u'] | ['A1g', 'A1g'] | |

| B2u | True | [-1, 1] | ['y'] | ['A1g'] | ['A1g', 'A1g'] | |

| B3u | A1g | False | [-1, 1] | ['x'] | ['B3u'] | ['A1g', 'A1g'] |

| B1g | False | [-1, 1] | ['x'] | ['B2u'] | ['A1g', 'A1g'] | |

| A1u | False | [-1, 1] | ['x'] | ['B3g'] | ['A1g', 'A1g'] | |

| B1u | False | [-1, 1] | ['x'] | ['B2g'] | ['A1g', 'A1g'] | |

| B3g | False | [-1, 1] | ['x'] | ['A1u'] | ['A1g', 'A1g'] | |

| B3u | True | [-1, 1] | ['x'] | ['A1g'] | ['A1g', 'A1g'] | |

| B2g | False | [-1, 1] | ['x'] | ['B1u'] | ['A1g', 'A1g'] | |

| B2u | False | [-1, 1] | ['x'] | ['B1g'] | ['A1g', 'A1g'] |

| Cont | B1u | B2u | B3u | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Eke | Targ | Total | Type | h | it | l | m | mu | muX | |||

| 0 | A1g | B1u | U | 0 | NaN | 1 | 0 | 0 | 0 | (1+0j) | ||

| 1 | NaN | 3 | 0 | 0 | 0 | (1+0j) | ||||||

| 2 | NaN | 3 | -2 | 0 | 0 | (0.7071067811865475+0j) | ||||||

| 2 | 0 | 0 | (0.7071067811865475+0j) | |||||||||

| B2u | U | 0 | NaN | 1 | -1 | -1 | 0 | (-0.7071067811865475+0j) | ||||

| 1 | 0 | (-0.7071067811865475+0j) | ||||||||||

| 1 | -1 | 0 | (-0.7071067811865475-0j) | |||||||||

| 1 | 0 | (-0.7071067811865475-0j) | ||||||||||

| 1 | NaN | 3 | -3 | -1 | 0 | (-0.7071067811865475+0j) | ||||||

| 1 | 0 | (-0.7071067811865475+0j) | ||||||||||

| 3 | -1 | 0 | (-0.7071067811865475-0j) | |||||||||

| 1 | 0 | (-0.7071067811865475-0j) | ||||||||||

| 2 | NaN | 3 | -1 | -1 | 0 | (-0.7071067811865475+0j) | ||||||

| 1 | 0 | (-0.7071067811865475+0j) | ||||||||||

| 1 | -1 | 0 | (-0.7071067811865475-0j) | |||||||||

| 1 | 0 | (-0.7071067811865475-0j) | ||||||||||

| B3u | U | 0 | NaN | 1 | -1 | -1 | 0 | (0.7071067811865475+0j) | ||||

| 1 | 0 | (0.7071067811865475+0j) | ||||||||||

| 1 | -1 | 0 | (-0.7071067811865475-0j) | |||||||||

| 1 | 0 | (-0.7071067811865475-0j) | ||||||||||

| 1 | NaN | 3 | -1 | -1 | 0 | (0.7071067811865475+0j) | ||||||

| 1 | 0 | (0.7071067811865475+0j) | ||||||||||

| 1 | -1 | 0 | (-0.7071067811865475-0j) | |||||||||

| 1 | 0 | (-0.7071067811865475-0j) | ||||||||||

| 2 | NaN | 3 | -3 | -1 | 0 | (0.7071067811865475+0j) | ||||||

| 1 | 0 | (0.7071067811865475+0j) | ||||||||||

| 3 | -1 | 0 | (-0.7071067811865475-0j) | |||||||||

| 1 | 0 | (-0.7071067811865475-0j) |

Show code cell content

%matplotlib inline

# May need this in some build envs.

3.7.2. Experimental information content#

As discussed in Quantum Metrology Vol. 2 [9], the information content of a single observable might be regarded as simply the number of contributing \(\beta_{L,M}\) parameters. In set notation:

where \(M\) is the information content of the measurement, defined as \(\mathrm{n}\{...\}\) the cardinality (number of elements) of the set of contributing parameters. A set of measurements, made for some experimental variable \(u\), will then have a total information content:

In the case where a single measurement contains multiple \(\beta_{L,M}\), e.g. as a function of energy \(\epsilon\) or time \(t\), the information content will naturally be larger:

where the second line pertains if each measurement has the same native information content, independent of \(u\). It may be that the variable \(\epsilon\) is continuous (e.g. photoelectron energy), but in practice it will usually be discretized in some fashion by the measurement.

In terms of purely experimental methodologies, a larger \(M_{u}\) clearly defines a richer experimental measurement which explores more of the total measurement space spanned by the full set of \(\{\beta_{L,M}^{u}(\epsilon,t)\}\). However, in this basic definition a larger \(M_{u}\) does not necessarily indicate a higher information content for quantum retrieval applications. The reason for this is simply down to the complexity of the problem (cf. Eq. (3.13)), in which many couplings define the sensitivity of the observable to the underlying system properties of interest. In this sense, more measurements, and larger \(M\), may only add redundancy, rather than new information.

From a set of numerical results, it is relatively trivial to investigate some of these properties as a function of various constraints, using standard Python functionality, as shown in the code blocks below. For example, \(M\) can be determined numerically as the number of elements in the dataset, the number of unique elements, the number of elements within a certain range or above a threshold, and so on.

# For the basic case, the data (Xarray object) can be queried,

# and relevant dimensions investigated

print(f"Available dimensions: {BetaNorm.dims}")

# Show BLM dimension details from Xarray dataset

display(BetaNorm.BLM)

Available dimensions: ('Labels', 't', 'Type', 'it', 'Eke', 'h', 'muX', 'BLM')

<xarray.DataArray 'BLM' (BLM: 9)>

array([(0, -1), (0, 0), (0, 1), (1, -1), (1, 0), (1, 1), (2, -1), (2, 0),

(2, 1)], dtype=object)

Coordinates:

* BLM (BLM) MultiIndex

- l (BLM) int64 0 0 0 1 1 1 2 2 2

- m (BLM) int64 -1 0 1 -1 0 1 -1 0 1# Note, however, that the indexes may not always be physical,

# depending on how the data has been composed and cleaned up.

# For example, the above has l=0, m=+/-1 cases, which are non-physical.

# Clean array to remove terms |m|>l, and display

cleanBLMs(BetaNorm).BLM

<xarray.DataArray 'BLM' (BLM: 7)> array([(0, 0), (1, -1), (1, 0), (1, 1), (2, -1), (2, 0), (2, 1)], dtype=object) Coordinates: * BLM (BLM) MultiIndex - l (BLM) int64 0 1 1 1 2 2 2 - m (BLM) int64 0 -1 0 1 -1 0 1

Show code cell content

# Clean array to remove terms |m|>l, and display - Xarray native version

# BetaNorm.BLM.where(np.abs(BetaNorm.BLM.m)<=BetaNorm.BLM.l,drop=True)

# BetaNorm.where(np.abs(BetaNorm.m)<=BetaNorm.l,drop=True)

# Thresholding can also be used to reduce the results

ep.matEleSelector(BetaNorm, thres=1e-4).BLM

<xarray.DataArray 'BLM' (BLM: 2)> array([(0, 0), (2, 0)], dtype=object) Coordinates: * BLM (BLM) MultiIndex - l (BLM) int64 0 2 - m (BLM) int64 0 0

# The index can be returned as a Pandas object, and statistical routines applied...

# For example, nunique() will provide the number of unique values.

thres=1e-4

print(f"Original array M={BetaNorm.BLM.indexes['BLM'].nunique()}")

print(f"Cleaned array M={cleanBLMs(BetaNorm).BLM.size}")

print(f"Thresholded array (thres={thres}), \

M={ep.matEleSelector(BetaNorm, thres=thres).BLM.indexes['BLM'].nunique()}")

Original array M=9

Cleaned array M=7

Thresholded array (thres=0.0001), M=2

For more complicated cases, with \(u>1\), e.g. time-dependent measurements, interrogating the statistics of the observables may also be an interesting avenue to explore. The examples below investigate this for the example “linear ramp” ADMs case. Here the statistical analysis is, potentially, a measure of the useful/non-redundant information content, for instance the range or variance in a particular observable can be analysed, as can the number of unique values and so forth.

Show code cell content

BetaNorm

<xarray.DataArray (Labels: 1, t: 1, Type: 1, it: 1, Eke: 1, h: 6, muX: 1, BLM: 9)>

array([[[[[[[[ 0.00000000e+00+0.j, -2.82094792e-01+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 6.93889390e-18+0.j,

0.00000000e+00+0.j]],

[[ 0.00000000e+00+0.j, -2.82094792e-01+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 4.48556893e-02+0.j,

0.00000000e+00+0.j]],

[[ 0.00000000e+00+0.j, -2.82094792e-01+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, -4.48556893e-02+0.j,

0.00000000e+00+0.j]],

[[ 0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j]],

[[ 0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j]],

[[ 0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j, 0.00000000e+00+0.j,

0.00000000e+00+0.j]]]]]]]])

Coordinates:

Euler (Labels) object (0.0, 0.0, 0.0)

* Labels (Labels) <U1 'A'

* t (t) int64 0

* Type (Type) <U1 'U'

* it (it) float64 nan

* Eke (Eke) int64 0

* h (h) int64 0 1 2 3 4 5

* muX (muX) int64 0

XSraw (Labels, t, Type, it, Eke, h, muX) complex128 (-0.28209479177...

XSrescaled (Labels, t, Type, it, Eke, h, muX) complex128 (-1.00000000000...

XSiso (Type, it, Eke, h, muX) complex128 (1.6666666666666663+0j) .....

* BLM (BLM) MultiIndex

- l (BLM) int64 0 0 0 1 1 1 2 2 2

- m (BLM) int64 -1 0 1 -1 0 1 -1 0 1

Attributes:

QNs: None

AKQS: None

EPRX: None

p: [0]

BLMtable: None

BLMtableResort: None

lambdaTerm: None

polProd: None

AFterm: None

thres: None

thresDims: Eke

selDims: {}

sqThres: False

dropThres: True

sumDims: ['mu', 'mup', 'l', 'lp', 'm', 'mp', 'S-Rp']

sumDimsPol: ['P', 'R', 'Rp', 'p']

symSum: True

outputDims: {'LM': ['L', 'M']}

degenDrop: True

SFflag: False

SFflagRenorm: False

BLMRenorm: 0

squeeze: False

phaseConvention: S

basisReturn: ProductBasis

verbose: 0

kwargs: {'RX': None}

matEleSelector: <function matEleSelector at 0x7f6660b79120>

degenDict: {'it': <xarray.DataArray 'it' (it: 1)>\narray([nan])\nC...

dataType: BLM# Convert to PD and tabulate with epsproc functionality

# Note restack along 't' dimension

BetaNormLinearADMsPD, _ = ep.util.multiDimXrToPD(BetaNormLinearADMs.squeeze().real,

thres=1e-4, colDims='t')

# Basic describe with Pandas,

# see https://pandas.pydata.org/docs/user_guide/basics.html#summarizing-data-describe

# This will give properties per t

BetaNormLinearADMsPD.describe()

| t | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|---|

| count | 5.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 |

| mean | -0.169 | -0.074 | -0.063 | -0.052 | -0.041 | -0.031 | -0.020 | -0.009 | 0.002 | 0.012 |

| std | 0.158 | 0.126 | 0.115 | 0.104 | 0.093 | 0.083 | 0.073 | 0.065 | 0.058 | 0.053 |

| min | -0.282 | -0.254 | -0.226 | -0.198 | -0.170 | -0.142 | -0.114 | -0.086 | -0.058 | -0.038 |

| 25% | -0.282 | -0.201 | -0.179 | -0.157 | -0.135 | -0.113 | -0.092 | -0.072 | -0.052 | -0.030 |

| 50% | -0.282 | -0.002 | -0.004 | -0.006 | -0.008 | -0.010 | -0.011 | -0.009 | -0.007 | -0.005 |

| 75% | -0.045 | 0.004 | 0.008 | 0.011 | 0.015 | 0.019 | 0.023 | 0.027 | 0.031 | 0.034 |

| max | 0.045 | 0.050 | 0.054 | 0.059 | 0.064 | 0.069 | 0.075 | 0.088 | 0.100 | 0.113 |

# Basic describe with Pandas,

# see https://pandas.pydata.org/docs/user_guide/basics.html#summarizing-data-describe

# By transposing the input array, this will give properties per BLM

BetaNormLinearADMsPD.T.describe()

| h | 0 | 1 | 2 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| l | 0 | 2 | 0 | 2 | 4 | 6 | 0 | 2 | 4 | 6 |

| m | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| count | 10.000 | 9.000 | 10.000 | 10.000 | 9.000 | 9.000 | 10.000 | 10.000 | 9.000 | 9.000e+00 |

| mean | -0.156 | 0.063 | -0.156 | 0.066 | 0.021 | 0.012 | -0.156 | -0.029 | -0.021 | 1.669e-03 |

| std | 0.085 | 0.034 | 0.085 | 0.014 | 0.012 | 0.007 | 0.085 | 0.011 | 0.011 | 9.140e-04 |

| min | -0.282 | 0.013 | -0.282 | 0.045 | 0.004 | 0.002 | -0.282 | -0.045 | -0.038 | 3.337e-04 |

| 25% | -0.219 | 0.038 | -0.219 | 0.056 | 0.013 | 0.007 | -0.219 | -0.037 | -0.029 | 1.001e-03 |

| 50% | -0.156 | 0.063 | -0.156 | 0.066 | 0.021 | 0.012 | -0.156 | -0.029 | -0.021 | 1.669e-03 |

| 75% | -0.093 | 0.088 | -0.093 | 0.077 | 0.030 | 0.017 | -0.093 | -0.021 | -0.013 | 2.336e-03 |

| max | -0.030 | 0.113 | -0.030 | 0.088 | 0.038 | 0.022 | -0.030 | -0.013 | -0.004 | 3.004e-03 |

For further insight and control, specific aggregation functions and criteria can be specified. For instance, it may be interesting to look at the number of unique values to a certain precision (e.g. depending on experimental uncertainties), or consider deviation of values from the mean.

# Round values to 2 d.p., then apply statistical methods

ndp = 2

BetaNormLinearADMsPD.round(ndp).agg(['min','max','var','count','nunique']).round(3)

| t | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|---|

| min | -0.280 | -0.250 | -0.230 | -0.200 | -0.170 | -0.140 | -0.110 | -0.090 | -0.060 | -0.040 |

| max | 0.040 | 0.050 | 0.050 | 0.060 | 0.060 | 0.070 | 0.080 | 0.090 | 0.100 | 0.110 |

| var | 0.024 | 0.015 | 0.014 | 0.011 | 0.009 | 0.007 | 0.005 | 0.004 | 0.003 | 0.003 |

| count | 5.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 | 10.000 |

| nunique | 3.000 | 5.000 | 7.000 | 7.000 | 8.000 | 8.000 | 8.000 | 8.000 | 8.000 | 8.000 |

# Define demean function and apply (from https://stackoverflow.com/a/26110278)

demean = lambda x: x - x.mean()

# Compute differences from mean

BetaNormLinearADMsPD.transform(demean,axis='columns')

| t | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| h | l | m | ||||||||||

| 0 | 0 | 0 | -0.126 | -0.098 | -0.070 | -4.205e-02 | -1.402e-02 | 1.402e-02 | 4.205e-02 | 7.009e-02 | 0.098 | 0.126 |

| 2 | 0 | NaN | -0.050 | -0.038 | -2.508e-02 | -1.254e-02 | 0.000e+00 | 1.254e-02 | 2.508e-02 | 0.038 | 0.050 | |

| 1 | 0 | 0 | -0.126 | -0.098 | -0.070 | -4.205e-02 | -1.402e-02 | 1.402e-02 | 4.205e-02 | 7.009e-02 | 0.098 | 0.126 |

| 2 | 0 | -0.022 | -0.017 | -0.012 | -7.172e-03 | -2.391e-03 | 2.391e-03 | 7.172e-03 | 1.195e-02 | 0.017 | 0.022 | |

| 4 | 0 | NaN | -0.017 | -0.013 | -8.537e-03 | -4.269e-03 | 3.469e-18 | 4.269e-03 | 8.537e-03 | 0.013 | 0.017 | |

| 6 | 0 | NaN | -0.010 | -0.007 | -4.912e-03 | -2.456e-03 | -3.469e-18 | 2.456e-03 | 4.912e-03 | 0.007 | 0.010 | |

| 2 | 0 | 0 | -0.126 | -0.098 | -0.070 | -4.205e-02 | -1.402e-02 | 1.402e-02 | 4.205e-02 | 7.009e-02 | 0.098 | 0.126 |

| 2 | 0 | -0.016 | -0.013 | -0.009 | -5.366e-03 | -1.789e-03 | 1.789e-03 | 5.366e-03 | 8.943e-03 | 0.013 | 0.016 | |

| 4 | 0 | NaN | 0.017 | 0.013 | 8.364e-03 | 4.182e-03 | 0.000e+00 | -4.182e-03 | -8.364e-03 | -0.013 | -0.017 | |

| 6 | 0 | NaN | -0.001 | -0.001 | -6.675e-04 | -3.337e-04 | -1.735e-18 | 3.337e-04 | 6.675e-04 | 0.001 | 0.001 |

# Apply statistical functions to differences from mean.

BetaNormLinearADMsPD.transform(demean,axis='columns'). \

round(ndp).agg(['min','max','var','count','nunique']).round(3)

| t | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|---|

| min | -0.130 | -0.100 | -0.070 | -0.04 | -0.01 | -0.00 | 0.00 | -0.010 | -0.010 | -0.020 |

| max | -0.020 | 0.020 | 0.010 | 0.01 | -0.00 | 0.01 | 0.04 | 0.070 | 0.100 | 0.130 |

| var | 0.004 | 0.002 | 0.001 | 0.00 | 0.00 | 0.00 | 0.00 | 0.001 | 0.002 | 0.003 |

| count | 5.000 | 10.000 | 10.000 | 10.00 | 10.00 | 10.00 | 10.00 | 10.000 | 10.000 | 10.000 |

| nunique | 2.000 | 6.000 | 5.000 | 5.00 | 2.00 | 2.00 | 3.00 | 5.000 | 6.000 | 6.000 |

In this case the analysis suggests that \(t=3 - 6\) contain minimal information (low variance), and \(t=4,5\) potentially redundant information (low nunique), whilst \(t=1,7 - 9\) show a greater total information content and number of unique values. However, this analysis is not necessarily absolutely definitive, since some nuances may be lost in this basic statistical analysis, particularly for weaker channels.

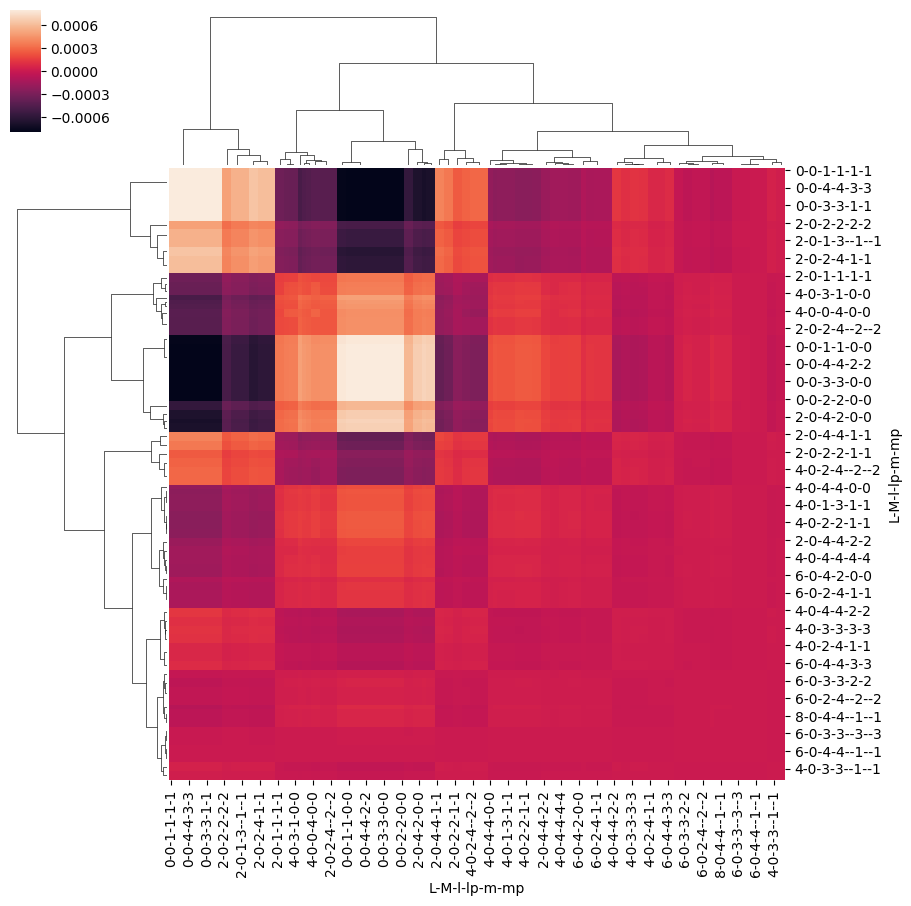

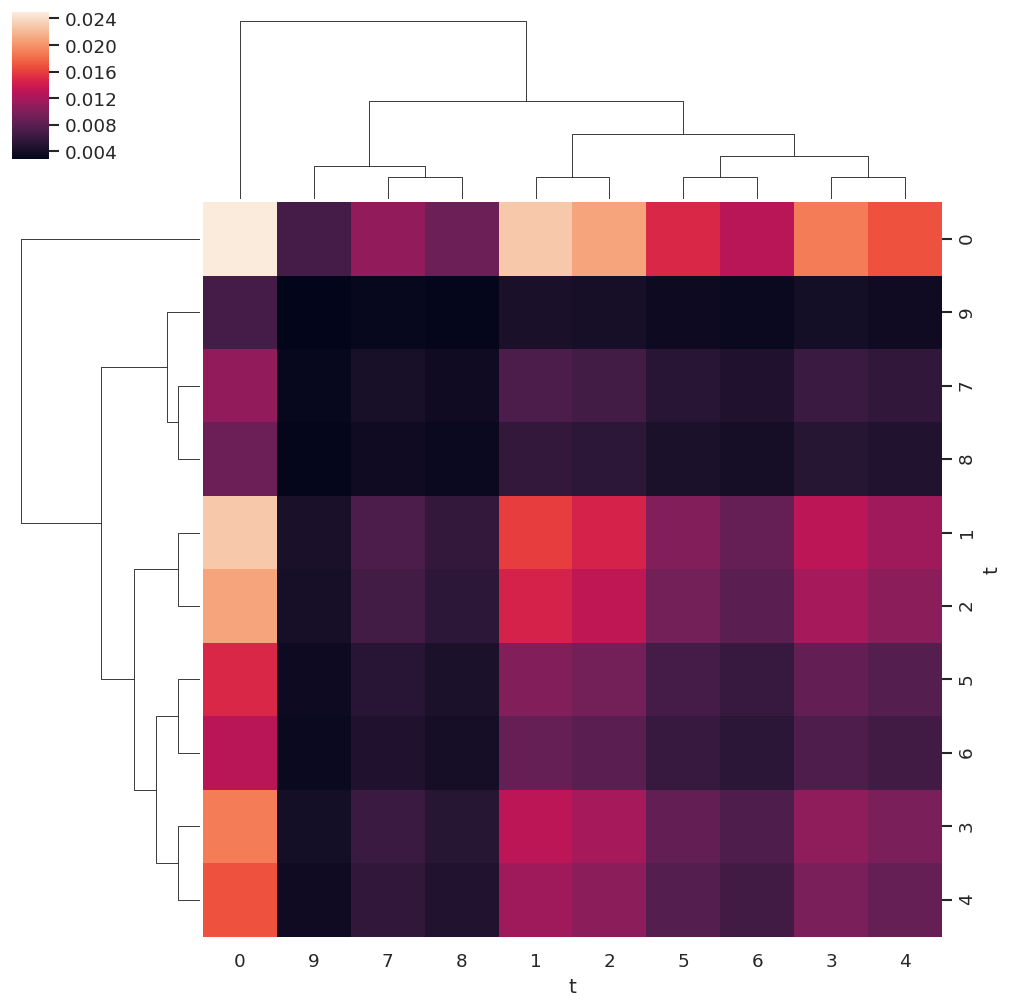

For a more detailed analysis, other standard analysis tools can be deployed. For instance, the covariance matrix can be investigated, given by \(K_{i,j}=\textrm{cov}[X_{i},X_{j}]=\langle(X_{i}-\langle X_{i}\rangle)(X_{j}-\langle X_{j}\rangle)\rangle\). For the linear ramp case this analysis is shown below and, although not particularly useful in this example, will become more informative for more complicated cases.

# Compute covariance matrix with Pandas

# Note this is the pairwise covariance of the columns,

# see https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.DataFrame.cov.html

covMat = BetaNormLinearADMsPD.cov()

# Plot with holoviews

figObj = covMat.hvplot.heatmap(cmap='viridis')

Show code cell content

# Glue figure

glue("covMatBLMExample", figObj) #covMat.hvplot.heatmap(cmap='viridis'))

WARNING:param.HeatMapPlot03020: Due to internal constraints, when aspect and width/height is set, the bokeh backend uses those values as frame_width/frame_height instead. This ensures the aspect is respected, but means that the plot might be slightly larger than anticipated. Set the frame_width/frame_height explicitly to suppress this warning.

Fig. 3.22 Example \(\beta_{L,M}(t)\) covariance matrix, see text for details.#

Show code cell content

# Seaborn also has nice cluster plotting routines, which include sorting by similarity

import seaborn as sns

sns.clustermap(covMat)

<seaborn.matrix.ClusterGrid at 0x7f68bfb12410>

3.7.3. Information content from channel functions#

A more complete accounting of information content would, therefore, also include the channel couplings, i.e. sensitivity/dependence of the observable to a given system property, in some manner. For the case of a time-dependent measurement, arising from a rotational wavepacket, this can be written as:

In this case, each \((\epsilon,t)\) is treated as an independent measurement with unique information content, although there may be redundancy as a function of \(t\) depending on the nature of the rotational wavepacket and channel functions.

(Note this is in distinction to previously demonstrated cases where the time-dependence was created from a shaped laser-field, and was integrated over in the measurements, which provided a coherently-multiplexed case, see refs. [99, 125, 126] for details.)

In the numerical examples below, this is considered in terms of the full channel (response) functions \(\varUpsilon_{L,M}^{u,\zeta\zeta'}\) as defined in (3.18) and (3.19) (see Sect. 3.3.9). Numerically, the routines follow from those already introduced above for exploring the information content of \(\beta_{L,M}\) terms, with the caveat that there are more dimensions to handle in the channel functions, indexed by the relevant set of quantum numbers \(\{\zeta,\zeta'\}\) - these can be included in the criteria for determination of \(M\), or selected or summed over as desired.

# Define a set of channel functions to test

channelFuncs = (basisProductLinearADMs['BLMtableResort'] * basisProductLinearADMs['polProd'])

# For illustrative purposes, define a subset to use for analysis

channelFuncsSubset = channelFuncs.sel(Labels='A').sel({'S-Rp':0,'mu':0,'mup':0}) #.sel(L=2)

# Check dimensions

print(f"Available dimensions: {channelFuncs.dims}")

print(f"Subset dimensions: {channelFuncsSubset.dims}")

Available dimensions: ('m', 'mp', 'S-Rp', 'l', 'lp', 'L', 'mu', 'mup', 'Labels', 'M', 't')

Subset dimensions: ('m', 'mp', 'l', 'lp', 'L', 'M', 't')

# Convert to PD and tabulate with epsproc functionality

# Note restack along 't' dimension

channelFuncsSubsetPD, _ = ep.util.multiDimXrToPD(channelFuncsSubset.squeeze().real,

thres=1e-4, colDims='t')

# Round values to 1 d.p., then apply statistical methods

# Compute per basis index and display

channelFuncsSubsetPD.T.round(2).agg(['min','max','var','count','nunique']).T

Show code cell output

| min | max | var | count | nunique | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| L | M | l | lp | m | mp | |||||

| 0 | 0 | 0 | 0 | 0 | 0 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 |

| 1 | 1 | 1 | 1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||

| 0 | 0 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| -1 | -1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| 2 | 2 | 2 | 2 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||

| 1 | 1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| 0 | 0 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| -1 | -1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| -2 | -2 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| 3 | 3 | 3 | 3 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||

| 2 | 2 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| 1 | 1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| 0 | 0 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| -1 | -1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| -2 | -2 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| -3 | -3 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| 4 | 4 | 4 | 4 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||

| 3 | 3 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| 2 | 2 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| 1 | 1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| 0 | 0 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| -1 | -1 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| -2 | -2 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| -3 | -3 | -0.18 | -0.09 | 9.167e-04 | 10.0 | 10.0 | ||||

| -4 | -4 | 0.09 | 0.18 | 9.167e-04 | 10.0 | 10.0 | ||||

| 2 | 0 | 0 | 2 | 0 | 0 | 0.04 | 0.12 | 6.767e-04 | 10.0 | 9.0 |

| 1 | 1 | 1 | 1 | 0.02 | 0.05 | 1.611e-04 | 10.0 | 4.0 | ||

| 0 | 0 | 0.03 | 0.11 | 6.667e-04 | 10.0 | 9.0 | ||||

| -1 | -1 | 0.02 | 0.05 | 1.611e-04 | 10.0 | 4.0 | ||||

| 3 | 1 | 1 | -0.09 | -0.03 | 4.233e-04 | 10.0 | 7.0 | |||

| 0 | 0 | 0.03 | 0.11 | 6.667e-04 | 10.0 | 9.0 | ||||

| -1 | -1 | -0.09 | -0.03 | 4.233e-04 | 10.0 | 7.0 | ||||

| 2 | 0 | 0 | 0 | 0.04 | 0.12 | 6.767e-04 | 10.0 | 9.0 | ||

| 2 | 2 | 2 | -0.08 | -0.02 | 3.333e-04 | 10.0 | 7.0 | |||

| 1 | 1 | -0.04 | -0.01 | 1.167e-04 | 10.0 | 4.0 | ||||

| 0 | 0 | 0.02 | 0.08 | 3.333e-04 | 10.0 | 7.0 | ||||

| -1 | -1 | -0.04 | -0.01 | 1.167e-04 | 10.0 | 4.0 | ||||

| -2 | -2 | -0.08 | -0.02 | 3.333e-04 | 10.0 | 7.0 | ||||

| 4 | 2 | 2 | 0.02 | 0.07 | 2.500e-04 | 10.0 | 6.0 | |||

| 1 | 1 | -0.09 | -0.03 | 4.622e-04 | 10.0 | 7.0 | ||||

| 0 | 0 | 0.03 | 0.10 | 5.344e-04 | 10.0 | 8.0 | ||||

| -1 | -1 | -0.09 | -0.03 | 4.622e-04 | 10.0 | 7.0 | ||||

| -2 | -2 | 0.02 | 0.07 | 2.500e-04 | 10.0 | 6.0 | ||||

| 3 | 1 | 1 | 1 | -0.09 | -0.03 | 4.233e-04 | 10.0 | 7.0 | ||

| 0 | 0 | 0.03 | 0.11 | 6.667e-04 | 10.0 | 9.0 | ||||

| -1 | -1 | -0.09 | -0.03 | 4.233e-04 | 10.0 | 7.0 | ||||

| 3 | 3 | 3 | 0.03 | 0.09 | 4.544e-04 | 10.0 | 7.0 | |||

| 1 | 1 | -0.05 | -0.02 | 1.611e-04 | 10.0 | 4.0 | ||||

| 0 | 0 | 0.02 | 0.07 | 3.122e-04 | 10.0 | 6.0 | ||||

| -1 | -1 | -0.05 | -0.02 | 1.611e-04 | 10.0 | 4.0 | ||||

| -3 | -3 | 0.03 | 0.09 | 4.544e-04 | 10.0 | 7.0 | ||||

| 4 | 2 | 2 | 2 | 0.02 | 0.07 | 2.500e-04 | 10.0 | 6.0 | ||

| 1 | 1 | -0.09 | -0.03 | 4.622e-04 | 10.0 | 7.0 | ||||

| 0 | 0 | 0.03 | 0.10 | 5.344e-04 | 10.0 | 8.0 | ||||

| -1 | -1 | -0.09 | -0.03 | 4.622e-04 | 10.0 | 7.0 | ||||

| -2 | -2 | 0.02 | 0.07 | 2.500e-04 | 10.0 | 6.0 | ||||

| 4 | 4 | 4 | -0.10 | -0.03 | 5.167e-04 | 10.0 | 8.0 | |||

| 3 | 3 | 0.01 | 0.02 | 2.667e-05 | 10.0 | 2.0 | ||||

| 2 | 2 | 0.01 | 0.03 | 5.444e-05 | 10.0 | 3.0 | ||||

| 1 | 1 | -0.06 | -0.02 | 1.878e-04 | 10.0 | 5.0 | ||||

| 0 | 0 | 0.02 | 0.07 | 2.500e-04 | 10.0 | 6.0 | ||||

| -1 | -1 | -0.06 | -0.02 | 1.878e-04 | 10.0 | 5.0 | ||||

| -2 | -2 | 0.01 | 0.03 | 5.444e-05 | 10.0 | 3.0 | ||||

| -3 | -3 | 0.01 | 0.02 | 2.667e-05 | 10.0 | 2.0 | ||||

| -4 | -4 | -0.10 | -0.03 | 5.167e-04 | 10.0 | 8.0 | ||||

| 4 | 0 | 0 | 4 | 0 | 0 | 0.01 | 0.05 | 2.500e-04 | 9.0 | 5.0 |

| 1 | 3 | 1 | 1 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||

| 0 | 0 | 0.01 | 0.05 | 1.944e-04 | 9.0 | 5.0 | ||||

| -1 | -1 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| 2 | 2 | 2 | 2 | 0.00 | 0.01 | 2.778e-05 | 9.0 | 2.0 | ||

| 1 | 1 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| 0 | 0 | 0.01 | 0.05 | 1.944e-04 | 9.0 | 5.0 | ||||

| -1 | -1 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| -2 | -2 | 0.00 | 0.01 | 2.778e-05 | 9.0 | 2.0 | ||||

| 4 | 2 | 2 | -0.04 | -0.00 | 1.500e-04 | 9.0 | 5.0 | |||

| 1 | 1 | -0.01 | -0.00 | 2.778e-05 | 9.0 | 2.0 | ||||

| 0 | 0 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| -1 | -1 | -0.01 | -0.00 | 2.778e-05 | 9.0 | 2.0 | ||||

| -2 | -2 | -0.04 | -0.00 | 1.500e-04 | 9.0 | 5.0 | ||||

| 3 | 1 | 1 | 1 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||

| 0 | 0 | 0.01 | 0.05 | 1.944e-04 | 9.0 | 5.0 | ||||

| -1 | -1 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| 3 | 3 | 3 | -0.01 | -0.00 | 2.500e-05 | 9.0 | 2.0 | |||

| 2 | 2 | -0.03 | -0.00 | 1.111e-04 | 9.0 | 4.0 | ||||

| 1 | 1 | -0.00 | -0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| 0 | 0 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| -1 | -1 | -0.00 | -0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| -2 | -2 | -0.03 | -0.00 | 1.111e-04 | 9.0 | 4.0 | ||||

| -3 | -3 | -0.01 | -0.00 | 2.500e-05 | 9.0 | 2.0 | ||||

| 4 | 0 | 0 | 0 | 0.01 | 0.05 | 2.500e-04 | 9.0 | 5.0 | ||

| 2 | 2 | 2 | -0.04 | -0.00 | 1.500e-04 | 9.0 | 5.0 | |||

| 1 | 1 | -0.01 | -0.00 | 2.778e-05 | 9.0 | 2.0 | ||||

| 0 | 0 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| -1 | -1 | -0.01 | -0.00 | 2.778e-05 | 9.0 | 2.0 | ||||

| -2 | -2 | -0.04 | -0.00 | 1.500e-04 | 9.0 | 5.0 | ||||

| 4 | 4 | 4 | 0.00 | 0.02 | 6.111e-05 | 9.0 | 3.0 | |||

| 3 | 3 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| 2 | 2 | -0.02 | -0.00 | 3.611e-05 | 9.0 | 3.0 | ||||

| 1 | 1 | -0.01 | -0.00 | 2.500e-05 | 9.0 | 2.0 | ||||

| 0 | 0 | 0.00 | 0.03 | 7.778e-05 | 9.0 | 4.0 | ||||

| -1 | -1 | -0.01 | -0.00 | 2.500e-05 | 9.0 | 2.0 | ||||

| -2 | -2 | -0.02 | -0.00 | 3.611e-05 | 9.0 | 3.0 | ||||

| -3 | -3 | 0.00 | 0.03 | 1.000e-04 | 9.0 | 4.0 | ||||

| -4 | -4 | 0.00 | 0.02 | 6.111e-05 | 9.0 | 3.0 | ||||

| 6 | 0 | 2 | 4 | 2 | 2 | 0.00 | 0.01 | 1.111e-05 | 9.0 | 2.0 |

| 1 | 1 | 0.00 | 0.02 | 3.611e-05 | 9.0 | 3.0 | ||||

| 0 | 0 | 0.00 | 0.02 | 6.111e-05 | 9.0 | 3.0 | ||||

| -1 | -1 | 0.00 | 0.02 | 3.611e-05 | 9.0 | 3.0 | ||||

| -2 | -2 | 0.00 | 0.01 | 1.111e-05 | 9.0 | 2.0 | ||||

| 3 | 3 | 3 | 3 | 0.00 | 0.00 | 0.000e+00 | 9.0 | 1.0 | ||

| 2 | 2 | 0.00 | 0.01 | 2.500e-05 | 9.0 | 2.0 | ||||

| 1 | 1 | 0.00 | 0.02 | 3.611e-05 | 9.0 | 3.0 | ||||

| 0 | 0 | 0.00 | 0.02 | 6.111e-05 | 9.0 | 3.0 | ||||

| -1 | -1 | 0.00 | 0.02 | 3.611e-05 | 9.0 | 3.0 | ||||

| -2 | -2 | 0.00 | 0.01 | 2.500e-05 | 9.0 | 2.0 | ||||

| -3 | -3 | 0.00 | 0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| 4 | 2 | 2 | 2 | 0.00 | 0.01 | 1.111e-05 | 9.0 | 2.0 | ||

| 1 | 1 | 0.00 | 0.02 | 3.611e-05 | 9.0 | 3.0 | ||||

| 0 | 0 | 0.00 | 0.02 | 6.111e-05 | 9.0 | 3.0 | ||||

| -1 | -1 | 0.00 | 0.02 | 3.611e-05 | 9.0 | 3.0 | ||||

| -2 | -2 | 0.00 | 0.01 | 1.111e-05 | 9.0 | 2.0 | ||||

| 4 | 4 | 4 | -0.00 | -0.00 | 0.000e+00 | 9.0 | 1.0 | |||

| 3 | 3 | -0.01 | -0.00 | 2.778e-05 | 9.0 | 2.0 | ||||

| 2 | 2 | -0.01 | -0.00 | 2.500e-05 | 9.0 | 2.0 | ||||

| 1 | 1 | 0.00 | 0.00 | 0.000e+00 | 8.0 | 1.0 | ||||

| 0 | 0 | 0.00 | 0.01 | 2.500e-05 | 9.0 | 2.0 | ||||

| -1 | -1 | 0.00 | 0.00 | 0.000e+00 | 8.0 | 1.0 | ||||

| -2 | -2 | -0.01 | -0.00 | 2.500e-05 | 9.0 | 2.0 | ||||

| -3 | -3 | -0.01 | -0.00 | 2.778e-05 | 9.0 | 2.0 | ||||

| -4 | -4 | -0.00 | -0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| 8 | 0 | 4 | 4 | 4 | 4 | 0.00 | 0.00 | 0.000e+00 | 3.0 | 1.0 |

| 3 | 3 | 0.00 | 0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| 2 | 2 | 0.00 | 0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| 1 | 1 | 0.00 | 0.01 | 2.778e-05 | 9.0 | 2.0 | ||||

| 0 | 0 | 0.00 | 0.01 | 2.778e-05 | 9.0 | 2.0 | ||||

| -1 | -1 | 0.00 | 0.01 | 2.778e-05 | 9.0 | 2.0 | ||||

| -2 | -2 | 0.00 | 0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| -3 | -3 | 0.00 | 0.00 | 0.000e+00 | 9.0 | 1.0 | ||||

| -4 | -4 | 0.00 | 0.00 | 0.000e+00 | 3.0 | 1.0 |

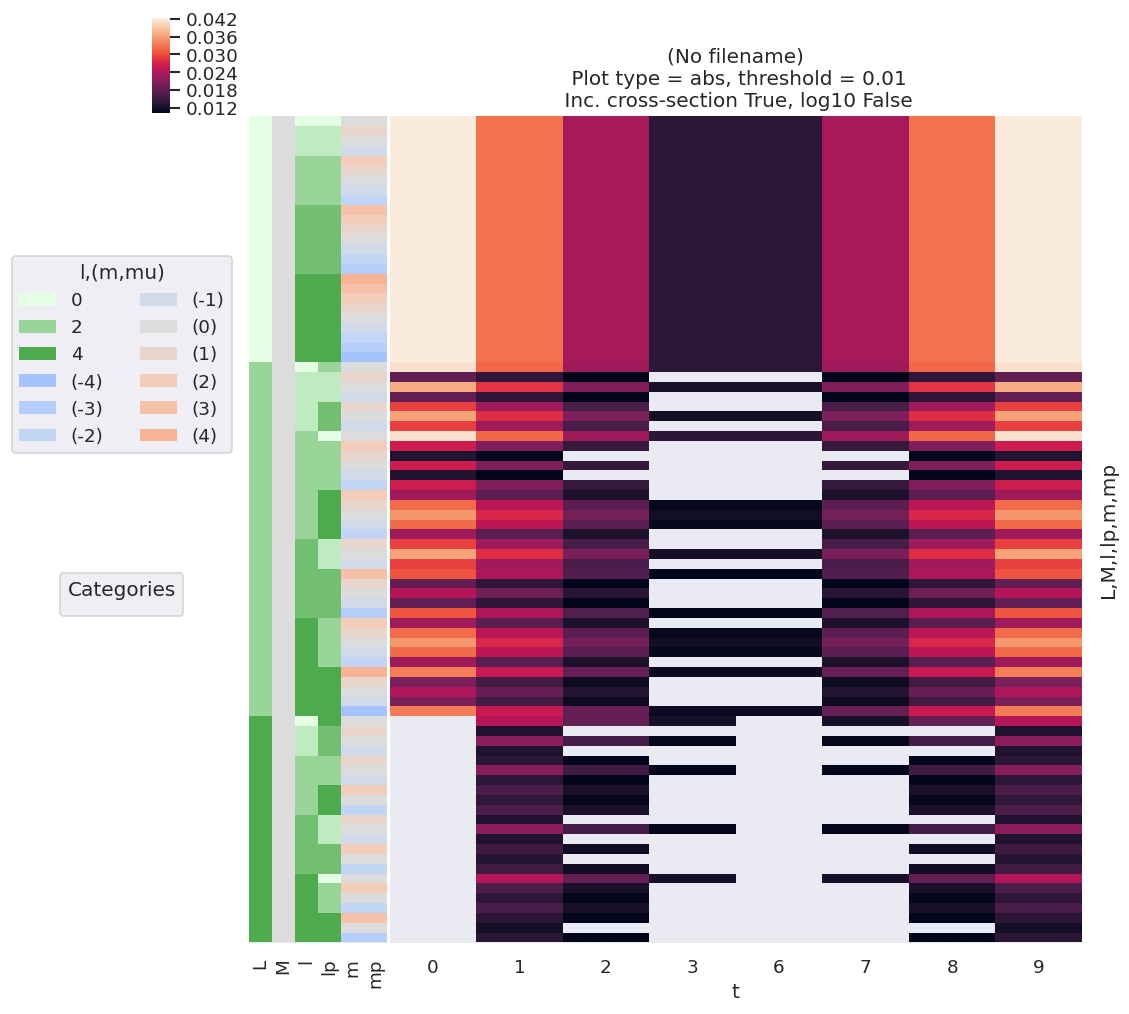

For the higher-dimensional case, it is useful to plot terms relative to all quantum numbers. For example, in a similar manner to the basis set explorations of Sect. 3.3.9, related properties such as the distance from the mean can be examined with lmPlot(). And, as previously demonstrated, other properties, such as the covariance, may be examined and plotted.

# channelFuncsSubsetPD.transform(demean,axis='columns')

# cmap=None # cmap = None for default. 'vlag' good?

# cmap = 'vlag'

# De-meaned channel functions

channelFuncsDemean = channelFuncsSubsetPD.transform(demean,axis='columns')

# Plot using lmPlot routine - note this requires conversion to Xarray data type first.

daPlot, daPlotpd, legendList, gFig = ep.lmPlot(channelFuncsDemean.to_xarray().to_array('t')

, xDim='t', cmap=cmap, mDimLabel='m');

Set dataType (No dataType)

Plotting data (No filename), pType=a, thres=0.01, with Seaborn

No artists with labels found to put in legend. Note that artists whose label start with an underscore are ignored when legend() is called with no argument.

# Full covariance mapping along all dims

%matplotlib inline

sns.clustermap(channelFuncsSubsetPD.T.cov().fillna(0)) #.round(3))

<seaborn.matrix.ClusterGrid at 0x7f6656cf5930>